Leaderboard

Popular Content

Showing content with the highest reputation on 2021-02-18 in all areas

-

5 points

-

INTRO: To recap, the "psychic" shader is abandoned; it will never happen. During discussions in that thread, it was agreed that the way to go is to complete the eye-candy "metal detecting" shader (which now detects human skin as well, and applies dielectric shimmer to it) and keep it as a better shader for existing art assets. This shader is now complete and ready for review and adoption. I include here how I described it in the D3555 update: The Next Shader last mentioned is the subject of this forum topic.

New art assets will NOT need re-interpretation by the shader; no need for "detections" of materials; and it will embrace a more comprehensive texture stack capable of specifying all the important parameters needed to describe a material, such as metal/non-metal, metallic specularity in the case of a metal; index of refraction and surface purity in the case of a non-metal. We should also make sure that each channel has appropriate bit depth as per the criticality of its value accuracy or resolution. For example, specular power, in my experience, is a parameter well worthy of its own texture channel, and it needs good resolution, as our eyes are quite sensitive to subtle changes in specular power across a surface. Other channels are less critical, such as rgb diffuse color.

Another goal I'm setting for myself with the new shader(s) is to reduce their number by making them capable of serving all the needs presently served by a multitude of shaders. Another goal of mine is to get rid of as many conditional compilation switches in the shaders as possible, with the following criteria:

Conditional compilation is okay to have when it pertains to user settings in graphics options, which are settings affecting all materials generally.

Conditional compilation is NOT okay to change compilation per-object or per-draw call, based on object parameters.

Why? For one thing, the confusing rats' nest of switches in the shader becomes intractable.
For another, it is effectively changing the shader from one call to the next, causing shader reloads, which are expensive in terms of performance. My tentative ambition is to produce two shaders: one for solids, and one for transparencies (excluding water; the current water shader is beautiful; I mean "glass" literally).

SHADER CAPABILITIES (FOR ARTISTS):

Without getting too technical, the "Next Shader", as we call it for now, will be able to depict a wide range of materials realistically. If you want a vase made of terracotta, there will be a way to produce a terracotta look. If you want a simple paint, or a high gloss paint, or a painted surface with a clear varnish on top, you will be able to specify that exactly. The look of glossy plant leaves, the natural sheen of human skin in the sun, all of these will be distinctly representable. Cotton clothes, silk clothes, leather, granite... And there will be a huge library of materials that all artists can draw from, so that all artists are working on an equal footing with regard to, for example, the albedo of the assets produced. For an example of where albedo is not consistent, at present, Ptolemaic women have a good skin tone, but Greek and Roman women's skins are so white they saturate when they are in the sun, even as other assets look too dark. This kind of inconsistency will be avoided.

You may notice I have not mentioned metals. The reason for that is that metals CAN be represented correctly by the current shaders using diffuse and specular colors, but hardly a soul on this planet knows how to do it right. So metallic representation will not be a "new" capability, per se; but inclusion of most metals in the materials library will be. And the most important feature of this shader is that it will be physics- and optics-based; not a bunch of manually adjusted steampunk data pipes and hacks.
However, it is important to state what it will NOT be capable of:

Although it will try to have some clever tricks to achieve things like specular occlusion (reflections of objects blocking the environment), it is not intended to be a ray-tracing shader.

It will NOT use auto-updating environment boxes with moving ground reflections or anything of the sort. There are better things to spend GPU cycles on. The ground reflection will just be a flat color.

It will NOT feature sub-surface scattering. Too expensive, and there are some cheap hacks that can be made to look almost like SSS.

It will NOT feature second light bounces. Too expensive for what it's worth.

It will NOT include things like hair or other complex material-specific shading.

It will NOT support anisotropic filtering. Vinyl records had not been invented yet in the first century, anyway.

Possible new features:

Not only shadows (as the present shader does) but also environmental shadowing (this may need props).

Detail textures: These are textures that typically contain tile-able noise, and which are mapped to repeat over large surfaces, such as a map, modulating the diffuse texture, or the normalmap, or the AO, at a very low gain, almost unnoticeable, but they make it seem like the textures used are of much greater resolution than they are. That's why they are called "detail textures"; they would seem to "add detail". Very effective trick, but they need to have their gain modulated from the main texture via a texture channel, otherwise their monotony can make them noticeable. Long story.

Possible test version of the shader, for artists, that detects impossible or unlikely material representations and shows them red or purple on the screen.

Possibly have HDR...

One thing this shader will NOT indulge in: Screen-space ambient occlusion. These are algorithms that produce a fake hint of ambient occlusion by iterative screen-space blurs of Z-depth tricks. They are HACKS.
In my next post I will discuss texture packing, and give a few random examples for how materials will be represented.

OTHER...

I paste below a couple of posts from the "psychic" shader as a way of not losing them, as they are relevant here:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Problem Number 1:

One issue that I seem to be the only guy in the world to ever have pondered is the fact that the Sun is NOT a point-source; it has a size. I don't mean the real size, but the apparent size: its diameter, in degrees. If we were on Mercury, it would be 1.4 degrees. From Venus (if you could see through the darned clouds), it would be 0.8 deg. From Mars it looks a tiny 0.4 degrees diameter. From Earth: 0.53 degrees. This should be taken into account in Phong specular shading.

The Phong light spot distribution (which is a hack; it is NOT physics-based) is (R dot V)^n, where R is the reflection vector, V is the view vector, the dot operation yields the cosine of the angle between them, and n is the specular power of the surface, where 5 is very rough, 50 is kind of egg-shell, and 500 is pretty smooth.

Consider the angle between our eye vector and the ray of light reflecting from the spot we are looking at. If the alignment is perfect, the cosine of 0 degrees is 1, and so we see maximal reflection. If the angle is not zero, but it is small, say 1 degree, the cosine of 1 degree is 0.9998477, a very small decrement from 1.0, but if the specular power of the material finish is 1000 (very polished), the reflection at 1 degree will fall by 14% from the 0 degree spot-on. But with a perfect mirror (a spec power of infinity), a 1 degree deviation (or any deviation at all) causes the reflected light to fall to zero. But that is assuming a point-source light... If what is reflecting off a surface is not a point source, however, the minimum specular highlight spot size is the size of our light source.
This can be modeled by limiting our specular power to the power that would produce that same spot size from a point source. But this limiting should be smooth; not sharp...

Problem Number 2:

This is a horrendous graphical mistake I keep seeing again and again: As the specular power of a surface finish varies, specular spotlights change in size, and that is correct; but the intensity of the light should vary with the inverse of the spotlight's size in terms of solid angle. If the reflected light is not so modulated, it means that a rough surface reflects more light (more Watts or Candelas) than a smooth surface, all other things being the same, which is absurd. As the specular spots get bigger, they should get dimmer; as they get smaller, they should get brighter.

But the question will immediately come up: "Won't that cause saturation for small spot-lights?" The answer is yes, of course it may. So what? That's not the problem of the optics algorithm; it is a hardware limitation, and there are many ways to deal with it... You can take a saturated, super-bright reflection of the sun off a sword, and spread it around the screen like a lens-flare; you can dim the whole screen to simulate your own temporary blindness... Whatever we do, it is an after-processing; it is not the business of the rendering pipeline to take care of hardware limitations. Our light model is linear, as it should be, and as physics-based as we can get away with. If a light value is 100 times greater than the screen can display, so be it! Looking at the reflection of the sun off a chromed, smooth surface is not much less bright than looking at the sun directly. Of course it cannot be represented. Again, so what?!

So the question is, how much should we set the brightness multiplier as specular power goes up? And also, at what specular power should the brightness multiplier be 1?
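The two claims above can be checked numerically. The following is only a sketch in Python (not shader code), and the function names are made up for illustration: it evaluates the Phong falloff (R dot V)^n at 1 degree for a power of 1000, and integrates the unscaled lobe over the hemisphere to show that, without a brightness multiplier, lower specular powers return more total light.

```python
import math

def phong_falloff(angle_deg: float, spec_power: float) -> float:
    """Relative Phong intensity (R dot V)^n at an angle off the perfect reflection."""
    return math.cos(math.radians(angle_deg)) ** spec_power

def unscaled_lobe_energy(spec_power: float, steps: int = 20000) -> float:
    """Midpoint-rule integral of cos(theta)^n over the hemisphere (steradians).

    Illustrative helper: it measures how much total light the lobe returns
    when no brightness multiplier is applied.
    """
    d_theta = (math.pi / 2) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        ring = 2 * math.pi * math.sin(theta) * d_theta  # solid angle of a thin ring
        total += math.cos(theta) ** spec_power * ring
    return total

if __name__ == "__main__":
    print(f"n=1000, 1 degree off axis: {phong_falloff(1.0, 1000.0):.4f}")
    for n in (1, 10, 100, 1000):
        print(f"n={n}: unscaled lobe energy = {unscaled_lobe_energy(n):.5f}")
```

The first number comes out near 0.86, matching the 14% drop quoted under Problem Number 1; the second loop shows the total shrinking as the power rises, which is the imbalance Problem Number 2 wants to correct.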
Research:

The two problems have a common underlying problem, namely, finding formulas that relate specular powers to spot sizes, where the latter need to be expressed in terms of conical half-angle and solid angle.

If we define the "size" of a specular highlight as the angle at which the reflection's intensity falls by 50%, then for spec power = 1, using Phong shading, (R dot V)^n, the angle is where R dot V falls to 0.5. R dot V is the cosine of the angle, so the angle is,

SpotlightRadius(power=1) = arccos( 0.5 ) = 60 degrees

Note that the distribution is equivalent to diffuse shading, except that diffuse shading falls to half intensity at 60 degrees from the spot where the surface normal and the light vector align, whereas a specular power of 1 spotlight falls to half intensity at 60 degrees from the mid vector between the light and view vectors, to the surface normal. But the overall distributions are equivalent. We can right away answer one of our questions above, and say that,

Specular power of 1.0 should have a light adjustment multiplier of 1.0

How this multiplier should increase with specular power is yet to be found...

But so, to continue, what should be our formula for spotlight radius as a function of specular power? For a perfectly reflective material,

Ispec/Iincident = (R dot V)^n

If we care about the 50% fall-off point, we can write,

0.5 = (R dot V)^n
(R dot V) = 0.5^(1/n)

So our spot size, in radians radius terms,

SpotRadius = arccos( 0.5^(1/n) )

We are making progress!

Now, specular power to solid angle: Measured in steradians, the formula for solid angle from cone half-angle (radial angle) is,

omega = 2pi * (1 - cos(theta))

But there are 2pi steradians in a hemisphere, so, measured in hemispheres, the formula becomes,

omega = 1 - cos(theta)

If we substitute our spot radius formula above, we get

omega = 1 - cos( arccos( 0.5^(1/n) ) )

which simplifies to,

SpotSizeInHemispheres = 1 - 0.5^(1/n)

where n is the specular power.
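The two formulas just derived translate directly into code. This is a minimal Python sketch for checking the numbers, not shader code; the function names are illustrative, and `expm1` is used only to keep precision when the power is very large.

```python
import math

def spot_radius(spec_power: float) -> float:
    """Half-intensity cone half-angle in radians: arccos(0.5^(1/n))."""
    return math.acos(0.5 ** (1.0 / spec_power))

def spot_size_in_hemispheres(spec_power: float) -> float:
    """Solid angle of the half-intensity spot, in hemispheres: 1 - 0.5^(1/n).

    Written as -expm1(ln(0.5)/n), which is the same quantity but stays
    accurate when 0.5^(1/n) is extremely close to 1.
    """
    return -math.expm1(math.log(0.5) / spec_power)

if __name__ == "__main__":
    for n in (1, 5, 50, 500):
        print(f"n={n}: radius = {math.degrees(spot_radius(n)):.3f} deg, "
              f"size = {spot_size_in_hemispheres(n):.6f} hemispheres")
```

For n = 1 this gives the 60 degree radius and the 0.5 hemisphere spot size used in the text.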
Now we are REALLY making progress... Our adjustment factor for specular spotlights should be inversely proportional to the solid angle of the spots, so,

AdjFactor = k * 1 / ( 1 - 0.5^(1/n) )

with a k to be determined such that AdjFactor is 1 when spec power is 1. What does our right-hand side yield at power 1?

1/1 = 1.
0.5^1 = 0.5.
1 - 0.5 = 0.5.
1/0.5 = 2.

So k needs to be 0.5

So, our final formula is,

BrightnessAdjustmentFactor = 0.5 / ( 1 - 0.5^(1/n) )

where n is specular power.

Almost done. One final magic ring we need to find is what is the shininess equivalent for the Sun's apparent size. We know that its apparent diameter is 0.53 degrees. So, its apparent radius is 0.265 degrees. Multiply by pi/180 and...

SunApparentRadius = 0.004625 radians

Good to know, but we need a formula to translate that into a shininess equivalent. Well, we just need to flip our second formula around. We said,

SpotRadius(radians) = arccos( 0.5^(1/n) )

so,

cos( SpotRadius ) = 0.5^(1/n)
ln( cos(SpotRadius) ) = ln( 0.5^(1/n) )
ln( cos(SpotRadius) ) = (1/n) * ln( 0.5 )
n = ln( 0.5 ) / ln( cos(SpotRadius) )

Plugging in our value,

ln( 0.5 ) = -0.69314718
cos( 0.004625 radians ) = 0.99998930471
ln( cos( 0.004625 radians ) ) = -1.0695347 E-5

Finally,

SunSizeSpecPwrEquiv = 64808 ... (make that 65k )

So, we really don't need to be concerned, except for ridiculously high spec power surfaces; but it's good to know, finally.

EDIT: Just so you know, when I worked on this, decades ago, I obviously made a huge math error somewhere, and I ended up with a Sun size derived specular power limitation to about 70 I think it was. I smooth-limited incoming specular power by computing n = n*70/(n+70). I knew it was wrong; the spotlights on flat surfaces were huge. What was cool about it was the perfect circular shape of those highlights. It was like looking at a reflection of the Sun, literally; except that it was so big it looked like I was looking at this reflection through a telescope.
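Here is a small Python sketch of the three results above: the brightness adjustment factor, the specular power equivalent of a light source's apparent radius, and a smooth limiter. The function names are illustrative. Reusing the n*limit/(n+limit) form from the EDIT with the corrected ceiling of roughly 65k is an assumption for illustration; the post only says the limiting should be smooth.

```python
import math

def brightness_adjustment(spec_power: float) -> float:
    """0.5 / (1 - 0.5^(1/n)); equals 1 at spec power 1."""
    return 0.5 / -math.expm1(math.log(0.5) / spec_power)

def spec_power_for_spot_radius(radius_rad: float) -> float:
    """Invert SpotRadius = arccos(0.5^(1/n)): n = ln(0.5) / ln(cos(radius))."""
    return math.log(0.5) / math.log(math.cos(radius_rad))

def smooth_limit(spec_power: float, ceiling: float) -> float:
    """Soft clamp n*c/(n+c): close to n for small n, approaches c for large n."""
    return spec_power * ceiling / (spec_power + ceiling)

if __name__ == "__main__":
    sun_radius = math.radians(0.53 / 2)           # apparent radius from Earth
    sun_power = spec_power_for_spot_radius(sun_radius)
    print(f"sun-size spec power equivalent: {sun_power:.0f}")
    for n in (1, 100, 10_000, 1_000_000):
        limited = smooth_limit(n, sun_power)
        print(f"n={n}: limited to {limited:.1f}, "
              f"brightness x{brightness_adjustment(limited):.1f}")
```

The printed equivalent lands near 65k, in line with the figure in the text; the last digits differ slightly because the text rounds the radius to 0.004625 radians before taking the cosine.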
EDIT2: One thing to notice here is the absurd non-linearity of the relevance of spec power; maybe we should consider encoding the inverse of the square root of spec power, instead. This way we have a way to express infinite (perfect surface; what's wrong with that?) by writing 0. We can express the maximum shimmery surface as 1, to get power 1. At 0.5 we get 4. At 0.1 we get 100. We could even encode fourth root of 1/n.

Edited Sunday at 09:39 by DanW58

@hyperion Another way to go about it, that you might care to consider, is to incorporate this shader now (if it works with all existing assets), but to also include a new shader with NO metal detection, and encourage artists to target the new shader. Different texture stack, channels for spec power, index of refraction and detail texture modulation, etc. So this shader and the new one would be totally incompatible, textures-wise, and even uniforms-wise. This path removes the concern about people getting unexpected results with new models. New models would NOT target the metal detection shader. It would be against the law. I could come up with the new shader in a couple of days; I got most of the code already. So, even if there are no assets using them, people can start targeting it before version 25. In this case, I'd cancel the "psychic" shader project.

Channels needed for a Good shader:

Specular texture: rgba with 8-bit alpha
  specular red
  specular green
  specular blue
  specular_power (1 to infinity, encoded as fourth root of 1/spec_power) is_metal

Diffuse texture: rgba with 1-bit alpha (rgb encoding <metals> / <non-metals>)
  diffuse red / purity_of_surface ((0.9 means 10% of surface is diffuse particles exposed, for plastics))
  diffuse green / index_of_refraction (0~4 range) ((1~4, really, but reserve 0 for metals))
  diffuse blue / detail_texture_modulator
  is_metal

Normalmap: rgb(a)
  u
  v
  w
  optional height, for parallax

Getting Blender to produce them should be no issue.
Note that I've inverted the first and second textures, specular first, with the diffuse becoming optional... For metals, the first texture alone would suffice, as diffuse can be calculated from specular color in the shader. Artists who want to depict dirt or rust on the metal can provide the diffuse texture, of course; but in the absence of diffuse the shader would treat specular as metal color and auto the diffuse. Also, when providing a diffuse texture for a metal, it could be understood to blend with the autogenerated metallic diffuse; so you only need to paint a bit of rust here, a bit of green mold there, on a black background, and the shader will replace black with metal diffuse color.

4 points
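The specular power encoding suggested in EDIT2 and in the channel list (inverse square root, or fourth root of 1/spec_power) can be sketched as follows. This is Python for illustration only; the function names, the `root` parameter, and the plain round-to-nearest 8-bit quantization are assumptions, not part of any agreed texture format.

```python
import math

def encode_spec_power(spec_power: float, root: int = 4) -> float:
    """Map spec power in [1, inf] to a stored value in [0, 1]: (1/n)^(1/root)."""
    if math.isinf(spec_power):
        return 0.0                      # 0 stands for a perfect mirror
    return (1.0 / spec_power) ** (1.0 / root)

def decode_spec_power(stored: float, root: int = 4) -> float:
    """Inverse mapping: n = 1 / stored^root, with 0 decoding to infinity."""
    if stored == 0.0:
        return math.inf
    return 1.0 / stored ** root

def to_byte(stored: float) -> int:
    """Quantize a [0, 1] value to an 8-bit channel (assumed rounding)."""
    return round(stored * 255)

def from_byte(value: int) -> float:
    return value / 255.0

if __name__ == "__main__":
    # Inverse square root variant: the values quoted in EDIT2.
    for stored in (1.0, 0.5, 0.1, 0.0):
        print(f"stored {stored}: power {decode_spec_power(stored, root=2)}")
    # Fourth-root variant after an 8-bit round trip.
    for n in (1, 6, 7, 250, 1000, 1050, 65000):
        byte = to_byte(encode_spec_power(n))
        print(f"power {n}: byte {byte}, decoded {decode_spec_power(from_byte(byte)):.1f}")
```

The first loop reproduces the values given in EDIT2 for the inverse square root variant. The second loop is a quick way to see which specular powers remain distinguishable after 8-bit quantization of the fourth-root variant.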

-

4 points

-

3 points

-

How? It's static. To me all of that is necessary to describe a material; I don't see where there is a "choice" to make.

quick google: https://forums.unrealengine.com/development-discussion/rendering/14157-why-did-u4-use-roughness-metallic-vs-specular-glossiness

basically what can be taken from there

metal/roughness model:
  taken from Disney
  more intuitive
  saves two channels
  doesn't permit physically impossible materials

1 point

-

The texture stack analysis begins... As I said to hyperion before, I analyze needs first, then look for how to fulfill them. Therefore, starting this analysis from looking at what Blender has to offer is anathema to my need to establish what we're looking for in the first place. Not that I will not look at what Blender, or any parties, have to offer; and not that I'm unwilling to compromise somewhat for the sake of pre-packaged convenience; but, to me, looking at what is available without analyzing what's needed first is a no-no. Let's start with the boring stuff: we have diffuse.rgb and specular.rgb. These two trinities MUST be mapped to rgb channels of textures in standard manner. Why? Because the parties that have come up with various compression and representation algorithms and formats know what they are doing; they have taken a good look at what is more or less important to color perception; so say a DDS texture typically has different numbers of bits assigned to the three channels for a reason. We certainly would not want to swap the green and blue channels and send red to the alpha channel, or any such horror. What I am unpleasantly and permanently surprised by is the lack of a texture format (unless I've missed it) where a texture is saved from high precision (float) RGB to compressed RGB normalized (scaled up in brightness) to make the most efficient use of available bits of precision, but packed together with the scaling factor needed by the shader to put it back down to the intended brightness. Maybe it is already done this way and I'm not aware of it? If not, shame on them! It is clear to me that despite so many years of gaming technology evolution, progress is as slow as molasses. Age of Dragons, I was just reading, use their own format for normalmaps, namely a dds file where the Z component is removed (recomputed on the fly), the U goes to the alpha channel, and V goes to all 3 RGB channels. 
Curiously, I had come up with that very idea, that exact same solution, 20 years ago, namely to try to get more bits of precision from DDS. What we decided back then, after looking at such options, was to give up on compression for normal maps; we ended up using a PNG. RGB for standard normalmap encoding, and alpha channel for height, if required. So, our texture pack was all compressed EXCEPT for normalmaps; and perhaps we could go that way too, or adopt Age of Dragons' solution, though the latter doesn't give you as much compression as you'd think, considering it only packs two channels, instead of up to four for the PNG solution. And you KNOW you can trust a PNG in terms of quality. In brief summary of non-metal requirements: We need Index of refraction and surface purity. Index of refraction is an optical quality that determines how much light bounces off a clear material surface (reflects), and how much of it enters (refracts), depending on the angle of incidence; and how much the angle changes when light refracts into the material. In typical rendering of paints and other "two layer" materials, you compute how much light reflects first; becomes your non-metallic specular; then you compute what happens to the rest of the light, the light refracting. It presumably meets a colored particle, becomes colored by the diffuse color of the layer underneath, then attempts to come out of the transparent medium again... BUT MAY bounce back in, be colored again, and make another run for it. A good shader models all this. Anyways, the Surface Purity would be 1.0 for high quality car paints, and as low as 0.5 for the dullest plastic. It tells the shader what percentage of the surface of the material is purely clear, glossy material, versus exposing pigment particles. In a plastic, pigments and the clear medium are not layered but rather mixed. ATTENTION ARTISTS, good stuff for you below... 
Another channel needed is the specular power channel, which is shared by metallic and non-metallic rendering, as it describes the roughness of a reflecting surface regardless of whether it is reflecting metallically or dielectrically. The name "specular power" might throw you off... It has nothing to do with horses, Watts, politics, or even light intensities; it simply refers to the core math formula used in Phong shading: cos(angle)^(sp), coded as pow( dot(half_vec,normal), specPower ).... dot(x,y) is a multiplication of two vectors ("dot product"), term by term across, with the results added up, which in the case of unit-vectors represents the cosine of the angle between them; normal is the normal to the surface (for the current pixel, actually interpolated by the vertex shader from nearby vertex normals); half_vec is the median between the incident light vector and the view vector... So, if the half-vector aligns well with the surface normal it means we have a well aligned reflection and the cosine of the angle is very close to one, which when raised to the 42nd power (multiplied by itself 42 times), or whatever the specular power of the material is, will still be close to one, and give you a bright reflection. With a low specular power (a very rough surface), you can play with the angle quite a bit and still see light reflected. If the surface is highly polished (high specular power), even a small deviation of the angle will cause the reflection intensity to drop, due to the high exponent (sharper reflections). Note however that the Phong shading algorithm has no basis in physics or optics. It's just a hack that looks good. For non-programmers, you may still be scratching your head about WHEN is all this math happening... It may surprise you to know it is done per-pixel. In fact, in my last shader, I have enough code to fill several pages of math, with several uses of the pow(x,y) function, and it is all done per-pixel rendered, per render-frame. 
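To make the formula concrete, here is the same pow( dot(half_vec,normal), specPower ) term written out in plain Python rather than GLSL. It is a sketch for illustration: the helper names are made up, vectors are simple tuples, and clamping negative cosines to zero is an assumption commonly made in shaders, not something stated above.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    """Term-by-term products, added up."""
    return sum(x * y for x, y in zip(a, b))

def specular_term(light_dir, view_dir, normal, spec_power):
    """pow(dot(half_vec, normal), specPower) for one pixel.

    light_dir and view_dir point away from the surface, toward the light
    and the eye. Negative cosines are clamped to zero (assumed convention).
    """
    summed = tuple(l + v for l, v in zip(normalize(light_dir), normalize(view_dir)))
    if all(abs(c) < 1e-12 for c in summed):
        return 0.0                      # light and view exactly opposite
    half_vec = normalize(summed)
    return max(dot(half_vec, normalize(normal)), 0.0) ** spec_power

if __name__ == "__main__":
    normal = (0.0, 0.0, 1.0)
    view = (0.0, 0.0, 1.0)
    # Light tilted 10 degrees from the normal, so the half vector is 5 degrees off.
    tilt = math.radians(10.0)
    light = (math.sin(tilt), 0.0, math.cos(tilt))
    for power in (5, 42, 500):
        print(f"spec power {power}: {specular_term(light, view, normal, power):.4f}")
```

With the light tilted a few degrees, the low power still returns most of the light while the high power drops off sharply, which is the rough versus polished behaviour described above.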
The way modern GPUs meet the challenge is by having many complete shader units (a thousand of them is not unheard of) working in parallel, each assigned to one pixel at a time. If there are 3 million pixels on your screen, they can be covered by 1000 shader engines processing 3,000 pixels each.

... specially this:

But back to the specular power channel and why it is so important. As I've mentioned elsewhere, the simplest way to represent a metal is with some color in specular and black in diffuse. However, you do that and it doesn't look like metal at all, and you wonder what's going on. Heck, you might even resort to taking photos of stainless steel sheets, and throwing them into the game, and it would only look good when looking at the metal statically; the moment you move, you realize it is a photograph. The look of metal is a dynamic behavior; not a static one.

Walk by a sheet of stainless steel, looking at the blurry reflections off it as you walk slowly by it. What do you see? You see lots of variations in the reflectivity pattern; but NOT in the intensity. The intensity of reflectivity, the specular color of the metal, doesn't change much at all as you walk. What does change, then? What changes is small, subtle variations of surface roughness. And this is how you can represent EXACTLY that: Add even a very subtle, ultra low gain random scatter noise to the (50% gray, say) specular power of your model of stainless steel; now, as you walk by it in-game you will see those very subtle light shimmers you see when you walk by a sheet of stainless steel. In fact, it will look exactly like a sheet of stainless steel. You will see it in how reflected lights play on it.

THAT IS THE POWER OF SPECULAR POWER !!!

For another example, say you have a wooden table in a room that you have painstakingly modeled and textured, but it doesn't look photorealistic no matter...
Well, take the texture you use to depict the wood grain, dim it, and throw it into the specular power channel. Now, when you are looking at a light reflecting off the table, the brighter areas in the specpower texture will also look brighter on reflection, but the image of the light will be smaller for them; while the darker zones of the wood fiber pattern in specpower will give a dimmer but wider image of the light. The overall effect is a crawling reflection that strongly brings out the fibers along the edges of light reflections, but not elsewhere, making the fibers look far more real than if you tried to slap a 4k by 4k normalmap on them, while using a single channel! For another example, say you have the same table, and you want to show a round mark from a glass or mug that once sat on that table. You draw a circle, fudge it, whatever; but where do you put it? In the diffuse texture it will look like a paint mark. In the specular texture it will look like either a silver deposition mark or like black ink. No matter what you do it looks "too much"... Well, try throwing it in the specpower channel... If your circle makes the channel brighter, it will look like a circle of water or oil on the table. If it makes the channel darker it will look like a sugar mark. Either way, you won't even see the mark on the table unless and until you see variations in the reflectivity of a light source, as you walk around the table, which is as it should be. THAT IS THE POWER OF SPECULAR POWER !!! So, having discussed its use, let me say that the packing of this channel in the texture pack is rather critical. Specular power can range from as low as 1.0 for an intentionally shimmery material, think Hollywood star dresses, to about a million to honestly represent the polish of a mirror. The minimum needed is 8 bits of precision. The good news is that it's not absolute precision critical; more a matter of ability to locally represent subtle variations. 
I'm not looking into ranges, linearities and representations for the texture channels yet, but I will just mention that the standard way to pack specular power leaves MUCH to be desired. Mapping specular powers from 1.0 to 256.0 as 0-255 char integers has been done, but it's not very useful. The difference between 6th and 7th power is HUGE. The difference between 249th and 250th power is unnoticeable. Furthermore, a difference between 1000th power and 1050th power is noticeable and useful, but a system limited to 256.0 power can't even represent it. As I've suggested elsewhere, I think a 1.0/sqrt(sqrt(specpower)) would work much better. But we'll discuss such subtleties later.

Other channels needed:

Alpha? Let me say something about alpha: Alpha blending is for the birds. Nothing in the real world has "alpha". You don't go to a hardware store or a glass shop and ask for "alpha of 0.7 material, please!" Glass has transparency that changes with view angle according to Fresnel's law. Only in fiction is there alpha, such as when people are teleporting, and not only does it not happen in real life, but in fact could never happen. For transparency, a "glass shader" (transparent materials shader) is what's needed. Furthermore, the right draw order for transparent materials and objects is from back to front, whereas the more efficient draw order for solid (opaque) materials is front to back; so you don't want to mix opaque and transparent in the same material object. If you have a house with glass windows, the windows should be separated from the house at render time, and segregated to transparent and opaque rendering stages respectively.

All that to say I'm not a big fan of having an alpha channel, at all. However, there's something similar to alpha blending that involves no blending, called "alpha testing". It typically uses a single bit alpha, and can be used during opaque rendering from front to back, as areas with alpha zero are not written to the buffer at all, no Z, nothing.
This is perfect for making textures for plant leaf bunches, for example. And so I AM a big fan of having a one-bit alpha channel somewhere for use as a hole-maker; and I don't want to jump the gun here, but just to remind myself of the thought, it should be packed together with the diffuse RGB in the traditional way, rather than be thrown onto another texture, as there is plenty of support in real time as well as off-line tools for standard RGBA bundling.

There is another alpha channel presently being used, I think in the specular texture, that governs "self-lighting", which is a misleading term; it actually means a reflective to emissive control channel. I don't know what it is used for, but it probably needs to stay there.

Another "single bit alpha channel" candidate that's been discussed and pretty much agreed on is "metal/non-metal". I've no idea where it could go. Having this single bit channel helps save a ton of texture space by allowing us to re-use the specular texture for dielectric constant and purity. There is a third channel left over, however. Perhaps good for thickness.

One thing we will NOT be able to do with this packing, using the metal/non-metal channel, is representing passivated oxide metals. Think of a modded Harley Davidson, with LOTS of chrome. If the exhaust pipe out of the cylinders, leading to the muffler/tail-pipe, is itself heavily chrome-plated, you will see something very peculiar happen to it. In areas close to the cylinder, the pipe will shine with rainbow color tinted reflections as you move around it. The reason for this is that the heat of the cylinder causes chrome to oxidize further and faster than cool chrome parts, forming a thicker layer of chromium oxide, which is a composite material with non-metal characteristics: transparent, having a dielectric constant.
The angle at which light refracts into the oxide layer varies with angle AND with wavelength; so each color channel will follow different paths within the dielectric layer, and sometimes come back in phase, sometimes out of phase. I modeled this in a shader 20 years ago. Unfortunately, the diffuse texture plus dielectric layer model is good for paints, plastics, and many things; but modelling thick passivating rust requires specular plus dielectric. It would have been good for sun glints off swords giving rainbow tinted lens flares...

EDIT: There is another way we might actually be able to pull off the above trick, and that is, if instead of using the metal/non-metal channel to switch between using the second texture for specular or non-metallic channels, we use it to interpret the first texture as diffuse or as specular. The reason this is possible is that non-metals don't have a "specular color", and metals don't really have a diffuse color either... And you might ask, okay, how do I put rust on steel, then? Well, rust is a non-metal, optically... Now, alpha-blending rust on steel may be a bit of a problem with this scheme, though... But anyways, if we were to do this, we'd have our index of refraction channel regardless of whether the bottom layer is diffuse or metallic. This would allow us to show metals like chromium and aluminium, which rust transparently, it would allow us to have rainbow reflecting steels, and would even allow us to have a rust-proof lacquer layer applied on top of bronze or copper.

And actually, it is not entirely inconceivable, in that case, for the metal/non-metal channel to have a value between 0 and 1, thus effectively mixing two paradigms ... food for thought! ... in which case it could be thrown in the blue channel of the 2nd texture. For artists, this could boil down to a choice between simple representation of a diffuse color material, or a specular metal, or to represent both, and have a mix mask.
Thus, you could represent red iron oxide by itself, diffuse, spec power, index of refraction, whatever; and another set of textures representing the clean metal, then provide a gray-scale texture with blotches of blackness and grey representing rust, over a white background representing clean metal, press a button and voila!, the resulting first texture alternates between rust diffuse and grey specular color, and the blue channel in the second texture is telling the shader "this red color is rust", and "that gray color is metal". It could look photo-realistic while using a minimum of channels to represent.

I have to go, so I'll post this partial analysis for now and continue later. One other channel that immediately comes to mind is detail texture modulation. I think we are over the limit of what we can pack in 3 textures, already; I have a strong feeling this is going to be a 4 texture packing. That's "packing", though; at the artistic level there could be a dozen. Other texture channels for consideration are the RGB for a damage texture, to alpha-blend as a unit takes hits... blood dripping, etc.

1 point
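The rust-over-metal "press a button" step described above could look roughly like this for a single texel. This is a hypothetical Python sketch: the function name, the tuple layout, and the linear blend for in-between mask values are all assumptions; the post only says that white means clean metal, black and grey mean rust, and that the mask ends up in the blue channel of the second texture.

```python
def bake_rust_over_metal(rust_diffuse, metal_specular, mask):
    """Combine one texel of a rust material and a clean-metal material.

    rust_diffuse, metal_specular: (r, g, b) tuples in [0, 1].
    mask: 1.0 = clean metal (white), 0.0 = rust (black); greys blend linearly
    (the linear blend is an assumption made for this sketch).

    Returns (first_texture_rgb, metal_mix), where metal_mix is the value that
    would go into the blue channel of the second texture.
    """
    first_rgb = tuple(r * (1.0 - mask) + m * mask
                      for r, m in zip(rust_diffuse, metal_specular))
    return first_rgb, mask

if __name__ == "__main__":
    rust = (0.45, 0.18, 0.10)       # made-up red iron oxide diffuse color
    steel = (0.56, 0.57, 0.58)      # made-up grey specular color
    for mask in (1.0, 0.5, 0.0):
        rgb, mix = bake_rust_over_metal(rust, steel, mask)
        print(f"mask {mask}: first texture {rgb}, metal mix {mix}")
```

In a real tool this would run over whole images; the per-texel form is used here only to show which input ends up in which channel.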

-

The guys are already packing things up for the release and it will be out real soon. I am taking note of this and will add this to my list for the next alpha development! Thanks!

1 point

-

Thanks! I've already started with this. Got it working fine (after unhelpfully pestering you). Ready for debugging and making changes. Thanks! I wrote this stuff almost a decade ago, but it's a decent example, even if I think there's plenty of stuff I would change now. Will start checking after I keep translating some more.

1 point

-

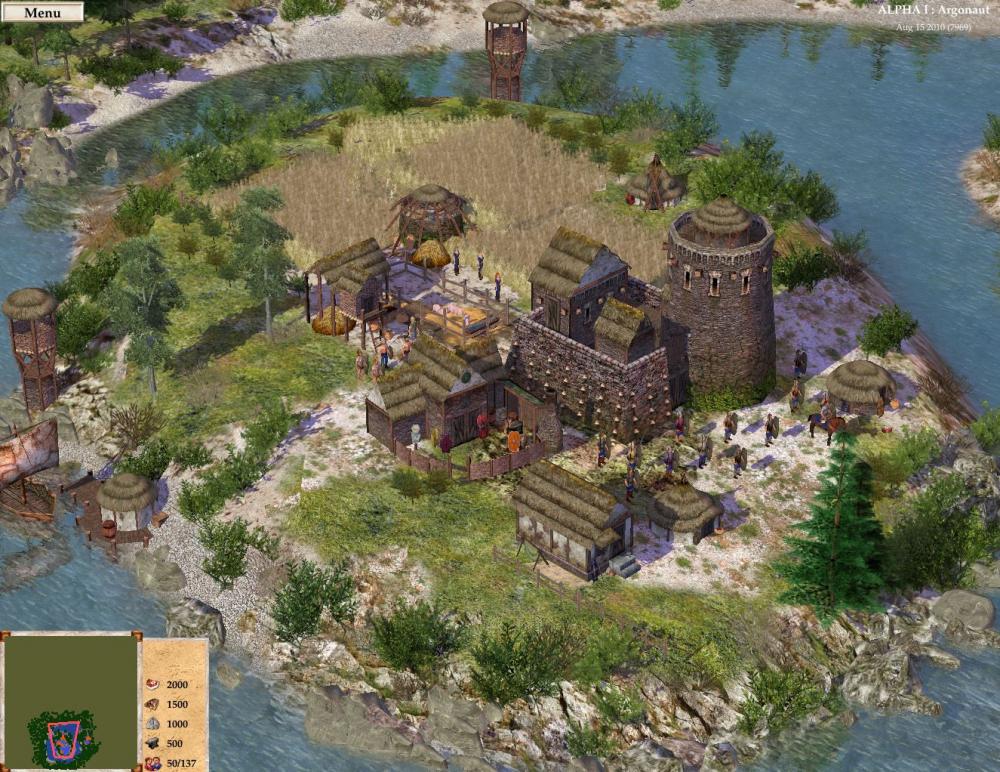

I have been following the development of this game closely for a while; I like this city-building style of the early 2000s with a Mesoamerican theme. Alias the developer keeps the game in development on GitHub for Windows and macOS. The Windows version runs smoothly on Ubuntu using Wine. https://github.com/Perspective-Games/Tlatoani_Releases/releases

1 point

-

Hi @nifa, sorry for not replying earlier, I took some time off from 0 AD modding. Is it possible? Probably, but it is a texture then, and not an aura. Last time I checked, the aura range always uses the player's color, which means that it cannot support texture, only alpha (CMIIW). I don't have any expertise in 3D modeling and texturing, but I'm sure that pavement can be supported. Is it desirable? Personally, I want it ... Though I still don't know how. I'll start modding again after A24 releases, perhaps.

1 point

-

Anyone ever played The Fertile Crescent? The graphics are not as flashy, but it offers an interesting take on mixing AoE and Stronghold mechanics. I like that citizens consume food and can starve. It's under development but playable. It's also free. https://lincread.itch.io/the-fertile-crescent Also, is there any plan for 0 AD to be on itch.io?

1 point

-

The patch has arrived. Roof brilliance artifact is about 90% removed. Patch diffed from the /binaries folder. I call it "prohibited" because the intent of this patch is to make the best of existing art assets, but is to be discouraged as a target shader for new art. EDIT: Rather than submitting a new patch to Phabricator, I updated my previous patch, D3555. https://code.wildfiregames.com/D3555

1 point

-

It's not a PhD paper, but it's something I never would have known without watching this. Hope you all like it!

1 point
