Yves

WFG Retired-

Posts

1.135 -

Days Won

25

Everything posted by Yves

-

finished custom map! (first try)

Yves replied to jarnomodderkolk's topic in Scenario Design/Map making

I like how you have taken care to make the maps playable. Many people make the mistake of only trying to make good-looking maps, which are unfortunately not fun to play. Well done:

- Both players seem to have more or less even chances on your maps.
- The maps are laid out so that the terrain contributes to gameplay (narrow gaps, potential locations for expansion etc.).
- Terrain textures fit well. You adjust them to altitude and environment.
- You use different trees for different environment types (palm trees on the shore, conifers on the east side where the terrain looks more rocky and less green, burnt trees close to the volcano).
- Terrain elevation makes it interesting, but still in a way that should not interfere with gameplay negatively.
- I think it's on purpose that there's (apparently) not enough room for a civic center on the islands in the middle of the second map? That could be interesting because it requires players to gather resources using docks first. I like it!

Possible improvements:

- Terrain texture tiling is very visible in some places (especially the grassy part in the middle).
- Could probably use some more eyecandy bushes and things. That would also help to hide the texture tiling.
- The seams between textures are too visible.

Keep up the good work!

-

Multi Threading for 0 A.D. simulation and less lag

Yves replied to JuKu96's topic in General Discussion

I don't agree here. I have a Radeon R9 270, which is a relatively modern and fast graphics card. Still, I get less than 20 FPS in some places of the map Deep Forest, even without any AI players and with just a couple of units. In the profiler, I often see 30-40 ms in the renderer. It's much better on Nvidia cards, but I'd still say it's something that we can improve and not solely because AMD's drivers suck. OpenGL has improved since our renderer was written and there are new extensions and approaches that are designed to reduce driver overhead. I've started working on such a new renderer, but it's definitely not a "quick win" and it will still take a lot of time until we see the first improvements (and even more until the renderer takes full advantage of OpenGL 4+).

-

That's a very nice feature!

-

One of your units was probably standing on the foundation. This sometimes happens if you have a lot of units standing on a spot and then you start building something on exactly that spot. All units will get to a spot around the foundation where they can build and when no more spots are left, they might block the path for those who are still on the building ground. That's just the foundation. The AI can place foundations anywhere, the same way you can do it. It should probably stop trying to build on the same spot if the foundation gets destroyed too many times (or wait with foundation placement until builders are closer).

-

Nice work!

-

Looking good! It didn't take long until someone started using the increased limit for terrain elevations (mountains).

-

It looks very nice, but probably a bit too much like a pet (very friendly, peaceful and shampooed less than an hour ago in the dog parlor).

-

Wow, looks very natural! Only the tail looks a bit strange to me.

-

I also agree that it's sometimes hard to spot some objects in 0 A.D. On the example image with the modified terrain texture, the improvement in that regard is clearly visible. However, I also agree with others that the modified texture looks less realistic and quite boring. How would you achieve more clearly visible units and objects without losing visual quality? Can you show that in an example? I'm not an artist and it's a pity that none of our artists (team members) has been able to comment yet (they are more or less away at the moment).

-

We already discussed this topic in a meeting before I started the implementation and I've posted all the relevant information here: http://wildfiregames.com/forum/index.php?showtopic=19119 There was no disagreement so far. To sum it up: The new autobuilder is up and running, but currently runs with a trial license, which will run out in 41 days. We need a Windows Server 2012 R2 Essentials license which costs $545.47 according to the link posted in the topic. I need the activation key for the license, then I can enable it on the existing server.

-

I'm not sure if displaying aura ranges as rings is the right way to do it. Especially when there are many overlapping rings (female citizen aura for example), it doesn't work well anymore. I imagined it more like an effect on the affected units, like a glow or something (but I still don't quite see how we would show multiple different types of auras for example).

-

GreyGoo does that by the way.

-

I'd say IntelliSense isn't quite as intelligent as the name implies. Microsoft is still far behind the other compilers when it comes to supporting C++11, so they seem to bother even less about fixing IntelliSense. There are tons of bug reports when you search the internet.

-

I did a quick review, but I'm not yet confident enough to commit it today. Not because of issues, but because I didn't have enough time to check everything carefully and it would be rushed a bit. That's a bit too close to feature freeze for me, but maybe someone else has time.

- The Void bot should be set to hidden in data.json to hide it from the selection for other maps. Check tutorial AI.
- Some functions could be moved to TriggerHelper.js when it's not a mod anymore.
- I'm not sure if the flag template is right in "structures". There seem to be only civ specific structures. "Other" seems to be better.
- As others said, we also need a way to handle scenarios that require a special match setup (players, AIs etc.). Anyway, this can be done later because it's a general problem and doesn't affect the other maps.

-

I've tested your scenario today and liked it a lot. Here's some feedback regarding the gameplay. I played on the intermediate difficulty.

First, I scouted the barbarian camp and thought it was quite small. I killed most of the soldiers there and then went back to build up the base. It took some time to realize that the waves of attackers are getting bigger and bigger and at some point I lost after killing around 200 enemies. In the second game (also intermediate difficulty) I killed all soldiers in the barbarian camp and destroyed it just with my initial group of 5 longswordsmen.

I think it's not optimal that it's so easy to win when rushing early and so hard when trying to build up the base. My suggestion is that you reduce the number and/or frequency of units spawning and increase the camp size and the number of soldiers there. Maybe you could also spawn some reinforcements if you destroy certain buildings in the camp. Also it would be nice to make it more clear what you have to prepare for (increasing numbers of enemies spawning regularly).

I didn't quite understand what purpose the question of the elder has (seeking to increase combat skills or wisdom). I could figure it out by playing again or looking in the scripts, but wouldn't it be better if the player knew what impact the decision has?

-

Awesome work as always, LordGood! And nice to see some activity from the art department again.

-

I'm glad people are experimenting and working with triggers. Also your work and the approach makes a very good impression. I hope I'll have some time for testing it soon. Keep up the good work!

-

I'm quite sure there must be something left from a previous build. I had a similar problem when some data from packaging was left in my working copy. Try a cleanup with "Revert all changes recursively" and "Delete unversioned files and folders, Delete ignored files and folders". http://tortoisesvn.net/docs/nightly/TortoiseSVN_en/tsvn-dug-cleanup.html If you've done the previous step correctly, all the Visual Studio project directories should be gone now and you need to run update-workspaces.bat again.

-

turn 14 200
end
turn 15 200
cmd 1 {"type":"walk","entities":[7079,7080],"x":620.8948974609375,"z":839.1896362304688,"queued":false}
end
turn 16 200
end
turn 17 200
end

You see the commands in this file already like in the example above (here you see a move command for two units). The hashes are generated in multiplayer games and are a checksum of the game state or parts of it. You can't use the hashes for anything else than checking if they are equal. They are used to detect out of sync errors in multiplayer games for example. The AI doesn't send the commands like human players in case you were looking for that. Commands.txt is generated during the game in real-time, as well as the hashes.
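If you want to process such a file yourself, the structure shown above can be read with a few lines of code. This is only a minimal sketch in Python based on the excerpt: it assumes that the first number after "turn" is the turn number and the second one the turn length, and that the number after "cmd" is the player who issued the command. The function name `parse_commands` is made up for this example and is not part of the game.

```python
import json


def parse_commands(text):
    """Parse replay-style text into a list of turns.

    Assumptions (taken from the excerpt only, not from the engine source):
    "turn N L" opens a turn, "cmd P {json}" is a command issued by player P,
    and "end" closes the turn.
    """
    turns = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("turn "):
            _, number, length = line.split()
            current = {"turn": int(number), "length": int(length), "commands": []}
        elif line.startswith("cmd ") and current is not None:
            # Split only twice so the JSON payload stays in one piece.
            _, player, payload = line.split(" ", 2)
            current["commands"].append((int(player), json.loads(payload)))
        elif line == "end" and current is not None:
            turns.append(current)
            current = None
        # Any other line (hashes, header lines) is ignored in this sketch.
    return turns


SAMPLE = """turn 14 200
end
turn 15 200
cmd 1 {"type":"walk","entities":[7079,7080],"x":620.8948974609375,"z":839.1896362304688,"queued":false}
end
turn 16 200
end
"""

turns = parse_commands(SAMPLE)
player, command = turns[1]["commands"][0]
print(player, command["type"], command["entities"])
```

The sketch skips every line it does not recognize, so it should not choke on hash lines, but it also does nothing useful with them.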

-

Have you checked the commands.txt file that already gets created in each game? Look in the wiki in GameDataPaths to find the files. Is that what you need?

-

Hi Veronica Welcome to the forums and good luck with your master thesis! Have you seen our wiki documentation about localization here?

-

The Tracelogger shows the time spent running. The game is a 2vs2 AI game on the map Greek Acropolis (4) this time. The difficulty is really that the difference of running more in IonMonkey might have a bigger effect on other maps or when the game plays out differently. Part of the decision is still guessing.

-

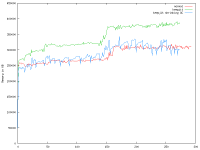

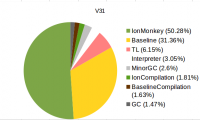

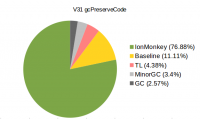

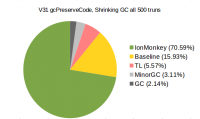

I've spent quite some time analyzing the effect of gcPreserveCode on memory usage and performance. In the previous measurements, we have seen a 13.5% performance improvement in the non-visual replay when gcPreserveCode is enabled. I've also checked memory usage there, but it was only the size of the JS heap reported by SpiderMonkey. If SpiderMonkey needs more memory with gcPreserveCode that is not part of the JS heap, we wouldn't have seen it there. So one of the first things to do is comparing total memory usage:

Here you see a graph of the total memory usage during a non-visual replay of 15000 turns with preserving JIT code and without (both v31). The measurement in blue will be explained later. There's one sample every 5 seconds. As expected, the keepjit graph (green) is a bit shorter because it runs faster. At the end of the replay, keeping the JIT code needs 76 MB or 24-25% more memory. This is just the non-visual replay, so the percentage looks less scary with a real game where much more memory is needed for loading models, textures, sound etc.

It's not only important what effect the gcPreserveCode setting has during a game, but also what effect it has when playing several games in a row. The JIT code is kept on the compartment level (might partially be zone level, but that's the same for this case in practice). We create a new compartment when we start a new game and destroy the compartment when we end it. This means we don't keep simulation JIT code for more than one game. The same is true for the NetServer in multiplayer games, for random maps and for GUI pages. Also we can run a shrinking GC at any time, which throws away all the JIT code and associated information.

The third graph (in blue) shows an additional memory measurement when a shrinking GC is called every 500 turns and gcPreserveCode is enabled. It confirms that calling a shrinking GC really frees the additional memory used for JIT code and associated data.
I would have expected it to have more or less the same negative effect on performance as the default settings, but actually it looks like the performance is as good as with keeping the JIT code for the whole game. It's a bit dangerous to conclude that from this single measurement though. I've not been especially careful about other programs running on the system and it's just one measurement.

I've also worked on fixing some problems with the new tracelogger in v31. I've shown some tracelogging graphs in the past already. It not only gives information about how long JS functions run and how often they are called, but also engine internal information like in which optimization mode they run and how often they had to be recompiled. Among other information you can also see how long garbage collection and minor GCs took. The tracelogger wasn't really used for such large programs like 0 A.D. so far, so it had a few bugs in that regard (correct flushing of log files to disk for example). Some of them are fixed in even newer versions or scattered across different bugzilla bugs, waiting to be completed, reviewed and committed. Together with h4writer, who helped us in the past already and who is the main developer of the tracelogger, I've managed to backport the most important fixes to v31. In addition, we discovered a limitation with large output files and he has made a new reduce script that can be used to reduce the size of the output files.

Now that the new tracelogger is functional, it can be used to further analyze the effect of gcPreserveCode. The following diagrams are made from Tracelogger data. They show how long different code runs in the different levels of optimization. Code starts running in the interpreter, which is the slowest mode. After a function has run a few times in the interpreter, it starts to get compiled to baseline code (BaselineCompilation) and then runs in Baseline mode. The highest level of optimization is IonMonkey.
TL stands for Tracelogger and can be ignored because it's only enabled when the Tracelogger is used. So basically the higher the IonMonkey part, the better. Interpreter should be close to 0%. I've added everything >=1% to the diagram and left the rest out.

v31 (no gcPreserveCode, no shrinking GCs)

v31 with gcPreserveCode

v31 with gcPreserveCode and shrinking GCs every 500 turns

The Tracelogger data matches the results from the performance measurement. Using gcPreserveCode without a shrinking GC obviously gives better results than with shrinking GC from the Tracelogger perspective. The difference is quite small though and probably doesn't justify the increased memory usage. Also the memory graphs indicate that there's probably not a big difference between these two modes (but this would need to be confirmed with additional performance measurements). The Tracelogger results from the default mode without gcPreserveCode are much worse (as expected). The code runs much less in IonMonkey, more in Baseline and even quite a bit in interpreter mode. The code gets garbage collected and thrown away way too often and thus has to be recompiled more often, resulting in more compilation overhead and more time running in less optimized form.

Conclusion

My conclusion based on these results is that using gcPreserveCode together with a regular shrinking GC seems to be a good compromise between memory usage and performance. It might be worth confirming with additional measurements that the shrinking GCs really don't have a significant (negative) effect on performance.
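For anyone who wants to build the same kind of breakdown from their own data: the diagrams described above show the share of total run time per execution mode, with everything below 1% left out. A minimal sketch of that calculation in Python could look like this. The function name `mode_shares` and all the numbers are made up for illustration; they are not the measurements from this post and this is not the actual reduce script.

```python
def mode_shares(durations, threshold=1.0):
    """Return the percentage of total time per mode, largest first.

    Modes whose share is below `threshold` percent are left out,
    like in the diagrams described in the post.
    """
    total = sum(durations.values())
    if total <= 0:
        return {}
    shares = {mode: 100.0 * time / total for mode, time in durations.items()}
    ordered = sorted(shares.items(), key=lambda item: item[1], reverse=True)
    return {mode: round(share, 1) for mode, share in ordered if share >= threshold}


# Hypothetical durations in milliseconds, purely for illustration.
example = {
    "IonMonkey": 700.0,
    "Baseline": 200.0,
    "GC": 50.0,
    "BaselineCompilation": 45.0,
    "Interpreter": 5.0,
}

result = mode_shares(example)
for mode, share in result.items():
    print(f"{mode}: {share}%")
```

With such a table for each of the three configurations, comparing the IonMonkey and Interpreter rows gives the same kind of comparison as the diagrams.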

-

I think it means all code in one compartment (simulation OR gui OR AI OR ...) because js::NotifyAnimationActivity works on the compartment level. It will not enter a loop and as far as I know, JIT code GC only happens as part of the normal full GC. We define some rules to trigger full GC which depend on how much the memory usage increased since the last full GC. However, there are other conditions that could trigger a full GC, so it would not be enough to add a js::NotifyAnimationActivity call right before we call the full GC. I assume it means JIT code GC will only happen if the last call to js::NotifyAnimationActivity was longer than a second ago at the time the full GC runs. Anyway, it seems to be a hack to use js::NotifyAnimationActivity to prevent JIT code GC during such a long period of time.
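To make that assumption more concrete, here is a tiny model of it in Python. It only models the rule as I described it above (JIT code is kept if the last notification was less than a second before the full GC); it is not how SpiderMonkey is actually implemented, and the function name `jit_code_survives` is made up for this sketch.

```python
def jit_code_survives(gc_time, notify_times, window=1.0):
    """Model of the assumed rule, not SpiderMonkey's real logic.

    JIT code is assumed to survive a full GC at `gc_time` (seconds) only if
    the most recent notification happened less than `window` seconds before.
    """
    earlier = [t for t in notify_times if t <= gc_time]
    if not earlier:
        return False
    return gc_time - max(earlier) < window


# Notifications every 0.5 s, with a hypothetical lag spike between 2.0 s and 3.5 s.
notifications = [0.0, 0.5, 1.0, 1.5, 2.0, 3.5, 4.0]

kept_before_spike = jit_code_survives(1.8, notifications)
kept_during_spike = jit_code_survives(3.4, notifications)
print(kept_before_spike, kept_during_spike)
```

The second call illustrates the weak point: a full GC that happens to run during a single gap of more than a second would throw the JIT code away under this model.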

-

It's not too bad in this specific test, I'm more concerned about the support of SpiderMonkey. If there's no solution that is meant for our case, it could suddenly break without an alternative. So far I know about the following ways to prevent GC of JIT code:

- Set the flag "alwaysPreserveCode" in vm/Runtime.cpp (tested here)
- Use the testing functions to enable gcPreserveCode
- Keep calling js::NotifyAnimationActivity with a delay of less than a second

The first approach has the problem that it completely disables JIT GC for the whole engine and the source code needs to be changed. This solution doesn't work for SpiderMonkey versions provided by Linux distributions for example. The second approach did not work in my most recent tests, but if I investigate further, it probably could work. It would not require changes to the library source code, but otherwise has the same problems and is obviously declared as a testing function that shouldn't be used in production code. The third option seems to be actually used by some parts of Firefox, but it seems very fragile. GC of JIT code could happen again if there's only a single lag spike of more than a second, or in any other case where our calls don't get executed with less than a second delay. I really hope the SpiderMonkey devs have a better alternative to offer.