Yves

WFG Retired

Posts

1.135

Joined

-

Last visited

-

Days Won

25

Everything posted by Yves

-

I got a lot of these errors when running: ./pyrogenesis -quickstart -autostart="The Massacre of Delphi" -autostart-ai=1:aegis -autostart-ai=2:aegis

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

You have some good points. I plan to work on an integration branch after V24 is committed and to update that branch to the current development version. This should take care of these points and also help us stay informed about changes in the API. The following issues remain, but I think that's acceptable. We have seen in the profiling above that most of the performance issues are fixed with newer versions.

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

In my opinion it's completely unacceptable to have such a big part of the game as the scripting engine frozen and tied to one specific version. Any kind of issue we face will require more or less ugly workarounds or forking SpiderMonkey 1.8.5. Developing a JavaScript engine is not what we want, and forking would be a painful, pointless dead end. We won't be able to use new features of the JavaScript language, and we won't get bug fixes for the cloning problem, the OOS problems or other problems we currently solve with workarounds or will face in the future. Especially now that we are so much closer to the upgrade (my WIP patch is now only about 4000 lines long, compared to more than 24 000 lines a few months ago), stopping the upgrade would be complete nonsense.

I think AI threading would work without the upgrade. GC could be improved in the future, but we will only really know that when/if it happens. I just found this link. There's a short description of what GGC (Generational Garbage Collection) should improve.

-

I'm sorry, but I'd need too much time to figure that out myself first. That's a very exotic setup!

-

Why do you experiment with ARP spoofing? The only legitimate reason I see for this is educational (figuring out how it works). In this case you'd want to figure it out yourself or ask more specific questions.

-

1. Open the web interface of the router by typing its IP address into the address bar of your web browser (I think it should be 192.168.1.1).
2. Log in. (The default user should be admin and the default password 1234, but I hope you have changed that.)
3. Click on Advanced Setup, NAT, Virtual Servers.
4. Select "Custom Server", enter 20595 for External Port Start, External Port End, Internal Port Start and Internal Port End, and select UDP as the protocol.
5. As Server IP Address, enter your computer's IP address. That should be something like 192.168.1.x. On Windows, open a CMD window and type ipconfig to get your IP address.
6. Save.

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

Yes, it stands for Extended Support Release. There are no standalone SpiderMonkey packages provided for other versions, but they can be created manually relatively easily. I did the "backport" of my patch in less than a day, so the API difference between ESR24 and the later dev version I was using (something around v26) was relatively small. We don't know if there will be bigger API changes until ESR31 is ready though. Maybe they will enforce exact stack rooting, which will probably require quite a lot of work to get right.

The biggest problem I see with ESR31 is the additional work required for keeping the patch in sync. Your commit yesterday introduced some missing vars in for...in loops, for example. You would have gotten error messages if the update had been done already. I don't think the work would be massive, but it should be considered.

I don't know about any interesting new features. I haven't seen a public roadmap with planned features either. I prefer updating to ESR24 first, and leper favoured that approach too. Any other opinions?

-
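A note on the "missing vars in for...in loops" remark in the post above: the sketch below shows one way such a loop turns into an error. It assumes the scripts run as strict-mode code, where assigning to an undeclared variable throws a ReferenceError. Whether the upgraded engine reports it exactly this way in 0 A.D. is an assumption, and the function names and data are made up for illustration.

```javascript
// Loop variable declared with var: works in strict and non-strict code.
function sumWithDeclaredVar(obj) {
  "use strict";
  let total = 0;
  for (var key in obj)
    total += obj[key];
  return total;
}

// Loop variable not declared: in strict code the first assignment to `key`
// throws a ReferenceError instead of silently creating a global.
function sumWithMissingVar(obj) {
  "use strict";
  let total = 0;
  for (key in obj)
    total += obj[key];
  return total;
}

// Hypothetical data, only for illustration.
const resources = { food: 100, wood: 50 };

console.log(sumWithDeclaredVar(resources));
try {
  sumWithMissingVar(resources);
} catch (e) {
  console.log(e.name);
}
```

In non-strict code the second function would run without complaint and leak `key` into the global object, which is why such omissions can go unnoticed for a long time.

-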

That's because there's a typo in "commads.txt".

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

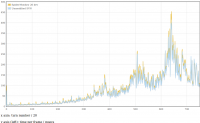

I have updated/downgraded the patch for version ESR24. Here's a comparison of the different versions. The keepjitcode profiles were made with a flag set in JSRuntime.cpp which disables garbage collection of JIT code. It improves performance but can't be used that way in its current state. I've attached the profile files because you can't see much on a screenshot. You can open graph.html in a browser, zoom with the mouse wheel and drag the graph with the mouse. You can also edit data.js to hide some graphs.

What's your opinion?

* Should we update to v24 now, which is an official ESR release and gets packaged for most Linux distributions?
* Should we use a non-ESR version, which is faster but not officially released as a standalone library and doesn't get packaged by most Linux distributions (or if they package it, then only for us)?
* Or, the third option, should we/I continue updating the patch until the ESR 31 version gets released (around July 2014)?

profile version decision.zip

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

I'm unsure what you mean. The SpiderMonkey devs know about these results and also gave some tips on what could improve performance. They don't seem to judge the results as so unexpected that they'd spend much time doing their own analysis though. To be fair, I believe them when they say that there were reports of performance improvements for applications other than the most well-known benchmarks. I also measured performance improvements for random map generation, for example.

My guess is that the improvements work well for simple code with a lot of repetition. If you have one function that runs 80% of the time, repeats the same steps over and over again and only works with simple and static objects, this is likely to run faster. If there's more complicated code working on more complicated objects with less repetition, the concept doesn't work out and the performance doesn't improve. Especially our entities and entity collections are quite complex, generic and polymorphic objects that can't be put in a compact array or optimized well in another way. That should be one of the next subjects to improve on our side, because improving it on the JS engine side is much more difficult and also more limited.

It definitely makes it easier. But there are also additional changes coming soon, like dropping support for stack scanning, which means a lot of work will have to be put into rooting all jsvals and objects properly.

-
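To make the guess in the post above a bit more concrete, here is a small sketch of the two kinds of code it contrasts. All names and data are hypothetical and not taken from the 0 A.D. scripts. It only shows the difference in shape and makes no claim about actual timings in any SpiderMonkey version.

```javascript
// Case 1: one hot loop repeating the same steps over simple, static data
// stored in a compact typed array.
function sumHeights(heights) {
  let total = 0;
  for (let i = 0; i < heights.length; i++)
    total += heights[i];
  return total;
}

// Case 2: generic entities whose set of properties differs from object to
// object, so every access has to cope with several object layouts.
function totalHitpoints(entities) {
  let total = 0;
  for (let i = 0; i < entities.length; i++) {
    const ent = entities[i];
    if (ent.health !== undefined)
      total += ent.health.hitpoints;
  }
  return total;
}

const heights = new Float64Array([1, 2, 3, 4]);
const entities = [
  { id: 1, health: { hitpoints: 100 }, attack: { melee: 10 } },
  { id: 2, position: { x: 5, z: 7 } }, // no health component at all
  { id: 3, health: { hitpoints: 40 }, cost: { food: 50 } },
];

console.log(sumHeights(heights), totalHitpoints(entities));
```

-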

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

I guess we are now finally at the point where we can really draw a conclusion about SpiderMonkey performance. After seeing the big improvements from moving the AI to one global in the previous profile, I hoped again that SpiderMonkey would rise from the ashes like a phoenix (or maybe like a burning fox?) and impress us with some nice performance improvements. The bad news is, it doesn't. The good news is, all of the big performance drawbacks we saw in the previous profiles are gone too, and there are only a few smaller peaks left that shouldn't stop us from doing the upgrade. The fact that both graphs are so close together also seems to show that hidden performance problems affecting the measurement are quite unlikely. I'm disappointed that this is the result of highly talented experts working for about two and a half years on JavaScript performance improvements, but it also tells me that this way of improving performance is a lost cause.

Here are the graphs:

The hash at the end isn't the same, but since both graphs are so similar, it's extremely unlikely that the simulation state ends up significantly different. The setup is the same as in the last graph.

Now the next steps are:

* Figuring out if there are significant problems that keep us from using the packaged ESR24 version, and then deciding which version should be used for the upgrade
* Fixing the remaining problems and bringing the patch to a committable state

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

I've written something about scripting in 0 A.D. here. Maybe it answers your question.

If he wants to help with 0 A.D., he's welcome. But since he is working on his own project, he probably prefers working on that and doesn't have time for other things. Anyway, to me it doesn't look like he uses JavaScript or even SpiderMonkey in his project. Some exchange with other SpiderMonkey embedders would be interesting.

-

I'm trying to get the SpiderMonkey upgrade ready for Alpha 16, but I can't promise anything. In this case it would be ESR24.

-

These sketches look awesome! I'm looking forward to seeing them with some color and lighting.

-

I think he looks too friendly, but I'm not sure what it is exactly. A few ideas:

* There's very little contrast. I only see darker spots near the shield and in the background. Especially his skin looks quite pale and a bit boring.
* There's not much expression in the face, especially in the smaller version as it will be seen in game.
* The pose is more defensive and looks less "confident" or "heroic" compared to other portraits.

You probably know about LordGood's thread with unit portraits. Maybe you'll find some additional inspiration there (the portraits get better quickly as the thread progresses).

-

Each computer has a local address inside your network (usually something like 192.168.1.x). When you use one router for internet access with these two computers, both use the router's external IP address for communicating with servers or other players on the internet. So when someone tries to connect to your IP address from the internet, that's the router's external address. Because there are multiple computers on your LAN, the router doesn't know which one it should forward the packet to, and the connection will fail. Port forwarding specifies that. You need to forward the port to the local IP address of the computer that should host the game.

On Windows you can open a command prompt (cmd.exe) and type "ipconfig". This should print your local IP address and some other information. On Linux or Mac the command is "ifconfig".

If only these two people should play together (without other players from the internet), you should open a game using the "Host Game" button directly from the main menu. The other player should then use the "Join Game" button from the main menu and enter your local IP address (the one you got with the ipconfig command). I'm not sure if it works from the lobby and if it works together with other players from the internet.
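If you happen to have Node.js installed, the local address can also be looked up with a few lines of script instead of ipconfig/ifconfig. This is only a sketch and has nothing to do with the game itself; the function name is made up, and it simply lists every non-loopback IPv4 address the operating system reports.

```javascript
const os = require("os");

// Collect the non-loopback IPv4 addresses of this machine.
// The parameter defaults to the real interface list and can be replaced
// with a fake one for testing.
function localIPv4Addresses(interfaces = os.networkInterfaces()) {
  const result = [];
  for (const [name, addrs] of Object.entries(interfaces)) {
    for (const addr of addrs || []) {
      // Depending on the Node.js version, family is "IPv4" or the number 4.
      const isIPv4 = addr.family === "IPv4" || addr.family === 4;
      if (isIPv4 && !addr.internal)
        result.push({ name: name, address: addr.address });
    }
  }
  return result;
}

console.log(localIPv4Addresses());
```

The entry that looks like 192.168.1.x is the one to use as the forwarding target and the one the second player enters in "Join Game".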

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

Ticket #2322 is committed. The next big step is getting #2241 in. After that I can update the SpiderMonkey patch again and test the performance. But first I need help with testing and reviewing #2241. It's a huge patch and a much more complicated one than the last one. Unfortunately I couldn't split it up more.

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

Yay, good news! Since the last post in this thread I've been working on integrating as many changes from the patch into svn as possible. When working on ticket #2241 I noticed that the changes there brought more or less the same performance problems the whole patch causes. I suspected the wrapping again and started to look for alternatives. In a discussion on #jsapi, Boris (bz) suggested using the module pattern to achieve a separation similar to the one we currently get by using different global objects. That was more or less just a keyword for me in the beginning, but after some experimenting I got it working in ticket #2322.

Today I've combined all parts of the puzzle and made the measurements. The results are exactly as I hoped and (kind of) expected! I've measured four different versions:

1. The unmodified svn version at revision r14386
2. The patch from ticket 2241 applied to r14386
3. The patch from ticket 2322 applied to r14386
4. The patches from tickets 2241 and 2322 combined, also based on r14386

All these versions use SpiderMonkey 1.8.5. It's a non-visual replay on the map Oasis 04 with 4 aegis bots. It runs about 15'000 simulation turns and (most importantly) all four replays ended with the same simulation state hash.

What you can see on this graph:

* The second measurement (2241) suffers from significant performance problems due to the wrapping.
* The patch that moves the AI to one global (2322) doesn't change much compared to the unmodified SVN version. This is expected because our current SVN version can use multiple globals in one compartment to avoid wrapping overhead. The theoretical benefits from loading the scripts only once and analyzing them more efficiently with the JIT compiler aren't significant. I expect this to be more important for the new SpiderMonkey version because its JIT compiler is more heavy-weight. On the other hand the JIT compiler is still designed to cause little overhead, so it's probably still not significant.
* When both patches are combined, we can avoid wrapping overhead and achieve about the same result as our current SVN version.

Because my measurements for the new SpiderMonkey also contained this wrapping overhead, it's now quite open again what performance benefits it will bring. Well, we can say that it won't make much of a difference in the first 4000 turns or so, but that's where it only starts getting interesting!

-
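For readers who haven't met the "module pattern" mentioned in the post above, here is a minimal sketch of the general idea. It is not the code from ticket #2322. All names (`sharedAI`, `createAIPlayer`, `onUpdate`) and the data are hypothetical. The point is only that several AI players can live in one global object and still keep their state apart, because each player's state sits in a closure rather than in a global of its own.

```javascript
// One shared global: data every AI player may read (hypothetical content).
const sharedAI = {
  entities: [
    { id: 1, owner: 1 },
    { id: 2, owner: 2 },
    { id: 3, owner: 2 },
  ],
};

// Each AI player is produced by a function call. Its private state lives in
// the closure, so no wrapper between global objects is needed to reach
// sharedAI, and other players can't touch this player's variables.
function createAIPlayer(playerId) {
  let commandsIssued = 0; // private to this player

  function ownEntities() {
    return sharedAI.entities.filter(e => e.owner === playerId);
  }

  // Only what is returned here is visible from the outside.
  return {
    playerId: playerId,
    onUpdate() {
      commandsIssued += ownEntities().length;
      return commandsIssued;
    },
  };
}

const players = [createAIPlayer(1), createAIPlayer(2)];
for (const player of players)
  console.log(player.playerId, player.onUpdate());
```

The trade-off is that everything in the same global can in principle reach shared data directly, so the separation relies on the code structure instead of on the engine.

-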

I think using only individual sounds and not a generic "battle loop" gives a better result in the end and should be the way to go. I would prefer that approach because context-sensitive sounds in battles make them much more epic than a generic battle track. You should hear it when a horde of elephants charges into battle, and you should hear fewer sword "clangs" when only two or three units with swords are in battle but many more arrow "wuuushes" when you have a big battalion of archers. With generic battle sounds played in loops it would also take a lot of care to make them at least a bit context sensitive, and we would always be limited beyond a certain level.

To make the sounds richer, we need more variation per type of sound (like "sword clang"), more types of sounds and more events that trigger them. A good result is only possible when the implementation and the sounds work well together. I hope Steven (stwf) comes back; he could help with the implementation side.

EDIT: I've sent a PM to Steven and told him about this thread.

-

No, why? Let's call these 200 ms - TimeOfComponentsUpdate the "update threshold". As long as the AI update takes less than the update threshold, this design gets the maximum out of the parallelism. If the AI update takes longer, it still saves us as much time as the update threshold for each AI update.

You are right though that it does not allow the AI to run, for example, only every 10th turn but for more than 200 ms without blocking the sim update. But if we wanted to allow sim updates between AI updates, we would need to run the same number of sim updates between the AI calculations on all machines in multiplayer games, because otherwise it would cause OOS issues. That could be feasible, but I don't like the fact that the AI would base its decisions on outdated data. It would have to cope with the fact that all its commands sent to the simulation could be invalid and ignored. That doesn't only affect the specific case of units dying or entities being destroyed; it could be a lot more.

Also keep in mind that we have users with single-core machines. How long do you think an AI update could take to allow relatively fluent gameplay on such machines, and how often is an AI update required? The AI needs to react to attacks, so I doubt the time between updates can be longer than 2 or 3 seconds anyway. It's difficult to estimate how big the impact on a single-core machine (but with time-based multiplexing for "fake parallelism") would be if we have an AI update taking half a second that runs every two seconds.

Yes, it's not optimal. Do you have any ideas to improve that? One way would be reducing the amount of data passed to the AI, which is desirable anyway. A lot of that terrain analysis data will hopefully become obsolete in the future when we get a proper pathfinding interface (which is not the data you're talking about though).

-

That's a good question, but it should be covered by this design. Basically the "UpdateComponents" part is where "the world is advancing", as you call it. At this stage the AI proxy listens to all important events and saves the changes (like units dying) in the AI Interface component. When the AI starts its calculation it has an up-to-date representation of the world. While it updates, there are only visual updates happening on the screen, like animations playing or parts of the UI updating that aren't tied to data from the simulation. The simulation interpolation makes everything look less choppy and more fluent, but it's also purely visual. The next simulation turn (where the world really changes) will have to wait until all AI calculations are done to avoid this and other problems. There actually shouldn't be any difference in that regard compared to single threading.

At the moment the simulation runs about every 200 ms in singleplayer games. This means that with this multi-threading design the AI won't delay the simulation update as long as it doesn't need more than 200 ms minus the duration of the components update (assuming it can run in parallel completely, not like on a single-core CPU).
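The last point can be put into numbers with a small sketch. The 200 ms turn length is the figure from this post; the function names and the example durations are made up, and the calculation assumes the AI runs fully in parallel in the gap after the components update.

```javascript
// All durations are in milliseconds.
// Time the AI can use without holding back the next simulation turn.
function aiTimeBudget(componentsUpdateMs, turnLengthMs = 200) {
  return Math.max(0, turnLengthMs - componentsUpdateMs);
}

// How long the next simulation turn has to wait for the AI, if at all.
function simulationDelay(aiUpdateMs, componentsUpdateMs, turnLengthMs = 200) {
  return Math.max(0, aiUpdateMs - aiTimeBudget(componentsUpdateMs, turnLengthMs));
}

// Hypothetical example: the components update takes 50 ms.
console.log(aiTimeBudget(50));         // 150
console.log(simulationDelay(100, 50)); // 0: the AI fits into the gap
console.log(simulationDelay(500, 50)); // 350: the next turn is held back
```

On a single-core machine the budget would effectively shrink towards zero, because the AI and the rest of the game have to share the same CPU time.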

-

I think that problem should be solved in Alpha 15: http://trac.wildfiregames.com/ticket/2110

-

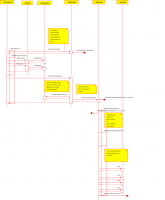

I'm currently thinking about the AI interface design again. An essential aspect to consider is how SpiderMonkey reacts to different ways of sharing data and what constraints are imposed for multi-threading. Currently it looks like it has significant performance problems with cross-compartment wrappers to the sharedAI data. For performance in a single-threaded environment it's best to stay within one compartment and one global object to avoid accessing a lot of data through wrappers. On the other hand, having multiple players in one global object most certainly means they can't run in different threads.

I tend to think that we won't have each player in its own thread because the overhead of passing data around would be too big. Maybe we could have one sharedAI object per 4 players, for example. This way we could have two threads for the AI player calculations in the extreme case of a match with 5+ AI players, and the overhead still wouldn't be too big (hopefully).

I've started with a UML diagram to show how I think the AI is meant to work with multi-threading, but without this 4-player split. So essentially it just runs the AI calculations off-thread as a whole in the "gap" between simulation updates, but it doesn't have a separate thread for each AI player. It's probably not perfectly valid UML, and if you find any obvious errors (content or UML syntax) please tell me. I should have some more time this Thursday to look over the diagram and improve it. I'm currently in a discussion with SpiderMonkey developers, and I've also created this diagram to show and explain how our interface works.
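The "one sharedAI object per 4 players" idea boils down to splitting the list of AI players into chunks. A minimal sketch of that split, with a made-up function name and no actual threading, could look like this:

```javascript
// Split the AI player IDs into groups of at most groupSize players.
// Each group would get its own sharedAI object and could run in its own thread.
function groupAIPlayers(playerIds, groupSize = 4) {
  const groups = [];
  for (let i = 0; i < playerIds.length; i += groupSize)
    groups.push(playerIds.slice(i, i + groupSize));
  return groups;
}

console.log(groupAIPlayers([1, 2, 3, 4]));       // one group
console.log(groupAIPlayers([1, 2, 3, 4, 5, 6])); // two groups
```

With up to 4 AI players everything stays in a single group, which matches the single-threaded case where one compartment and one global avoid the wrappers. A second group only appears from the fifth AI player on.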

-

Mythos_Ruler's Playlist

Yves replied to Mythos_Ruler's topic in Introductions & Off-Topic Discussion

I like the parliaments (knew them already of course). Some more funk!

-

Only the lobby will use the new user interface in Alpha 15. Check the latest development report for a screenshot.