Yves

Everything posted by Yves

-

Mythos_Ruler's Playlist

Yves replied to Mythos_Ruler's topic in Introductions & Off-Topic Discussion

-

There are these videos here, but they are quite basic: http://www.youtube.com/playlist?list=PLhfyO7XlavO38mj4x9W3KzjKHwTMZJUNg

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

The upgrade is quite close to completion now! I've fixed serialization issues, compiler warnings and tests, replaced the SpiderMonkey usage in Atlas (#2434), cleaned up the patch and fixed other smaller issues. Now I need someone with a Mac to set up the build script for Mac OS X and test the build process there (#2442). In the meantime I'll test the build on Windows, have another close look through the whole patch and then call it v1.0 and consider it ready for the final review. I'd like to be ready for review in about a week and done with the review as soon as possible after that, to have enough testing before the next alpha.

-

I don't know that part of the code well. Could you check if the driver is installed correctly and if installing the old driver again fixes the problem?

-

Did it work before? If it did, it would help a lot to know what changed. Have you changed your monitor setup or installed different drivers? Did it work in an earlier revision of 0 A.D.?

-

I suggest you consider using the current development version for AI development instead of the latest alpha version. Check this guide for information on how to get it on Windows: http://trac.wildfiregames.com/wiki/TortoiseSVN_Guide You don't necessarily have to compile the code yourself because prebuilt executables are included in the repository. Members with commit access can trigger an autobuild. We do that regularly to make it easier for the artists to keep up to date without having to compile.

-

Watching people play 0 A.D. isn't very interesting to me. In my opinion, the videos need some commentary to be entertaining. Without any audio at all, they lack personality and uniqueness.

-

wxWidgets conversion to current Visual C++ format fails

Yves replied to FeXoR's topic in Bug reports

I found this thread when trying to build wxWidgets with VS2010. It worked this way, but I had to add an additional option:

nmake -f makefile.vc UNICODE=1 USE_OPENGL=1 BUILD="release"

-

[Beginner] Bloodshed Dev C++ with this ok?

Yves replied to pogisanpolo's topic in Game Development & Technical Discussion

Hi and welcome! I have never used Dev C++. One problem will be that we currently don't create project files for this IDE. Do you know if it can import Visual Studio projects? We use premake to generate the workspace/project files, and it would be quite tedious to add and remove all source files and set all compiler and linker flags manually. Code::Blocks is the only cross-platform IDE we create project files for (well, makefiles can probably be used with MinGW). I'm using Code::Blocks on Linux and unfortunately it's a bit buggy, but it works more or less. Codelite is also supported by premake, but we don't generate project files for it. It's likely that our premake scripts and maybe premake itself need some adjustments to work properly with Codelite. I haven't tested Codelite yet, so I don't know how good it is.

-

Convert existing circle map to square map?

Yves replied to Leroy30's topic in Scenario Design/Map making

I think there were some discussions about completely removing square maps. A bad thing about them is how ugly they look on the minimap with all the black borders. I don't remember if there were other reasons to remove them.

-

IMO it would make sense to reuse as much as possible from the multiplayer lobby, which is currently internet-only (I'll call it the internet lobby). Most of the features of the internet lobby are also needed or nice to have for the LAN lobby (chat, map preview etc.). Reusing avoids duplication and helps achieve a more coherent user experience. I'm not saying it's trivial though, because the internet lobby wasn't designed with that in mind as far as I know.

Some important differences:

- You don't need a registered online account for the LAN lobby
- The rating stuff and probably most of the spam and cheat prevention stuff isn't needed for the LAN lobby
- Hosting, joining and listing available games work differently on a technical level
- ICMP chat without the lobby server won't work for the LAN

The use cases that need to be covered are:

- Host unlisted LAN/Internet game directly
- Host listed LAN game
- Host listed Internet game
- Join LAN/Internet game with IP
- Join listed LAN game with LAN lobby
- Join listed Internet game with Internet Lobby

Keeping the direct hosting and joining is necessary IMO. LAN discovery won't work across subnets, for example, and you could want to play with players in multiple subnets but still in a LAN setup. I'm not completely sure about hosting. Direct hosting does not need any network discovery functionality (either LAN or Internet), so it could be nice to have in some setups.

Maybe it could even reuse the internet lobby and add LAN games to the same list with some filter functionality (checkbox LAN, checkbox Internet). This would need changes to the internet lobby logon though. It could have a LAN-only mode where it does not display internet games and does not need a registered account for the login. There could also be checkboxes for "internet listed" and "LAN listed" for the hosting. Checking none of them would be the same as the "Host game" option from the main menu (use case "Host unlisted LAN/Internet game directly").
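The checkbox idea above can be sketched in a few lines of Python. This is only an illustration of the proposal, not existing lobby code: the names `Game`, `hosting_use_cases` and `visible_games` are hypothetical, and the sketch assumes every listed game is tagged as either "lan" or "internet".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Game:
    """Hypothetical entry in a combined LAN/Internet game list."""
    name: str
    network: str  # assumed to be either "lan" or "internet"


def hosting_use_cases(list_on_lan: bool, list_on_internet: bool) -> list[str]:
    """Map the two hosting checkboxes to the use cases named in the post."""
    cases = []
    if list_on_lan:
        cases.append("Host listed LAN game")
    if list_on_internet:
        cases.append("Host listed Internet game")
    # No checkbox ticked: same as the "Host game" option from the main menu.
    return cases or ["Host unlisted LAN/Internet game directly"]


def visible_games(games, show_lan, show_internet, lan_only_mode=False):
    """Apply the LAN/Internet filter checkboxes to the combined list."""
    if lan_only_mode:
        # LAN-only mode never displays internet games (and, per the post,
        # would not need a registered account for the login).
        show_internet = False
    wanted = {
        kind
        for kind, shown in (("lan", show_lan), ("internet", show_internet))
        if shown
    }
    return [game for game in games if game.network in wanted]
```

Joining by IP is deliberately left out of the sketch because it bypasses the list entirely.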

-

No problem, keep it up and I hope it's playable at least for 1vs1 games or maybe without AI players. Looking forward to seeing how it looks when it's finished!

-

It looks awesome! I fear that the map won't be playable for technical reasons at the current state of the engine. What about making a smaller map first? Think about a nice theme, try to make it work well from a gameplay perspective and make it as beautiful as on those screenshots. Wouldn't it be more fun and more motivating to get something playable into the game quickly than to work on a big piece of art that won't actually be playable for a long time?

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

I just noticed that I haven't replied to this yet. Spending too much time fine-tuning the GC doesn't make sense at the moment in my opinion. Maybe there will be some more API functionality in the next SpiderMonkey versions, or we will change our code to produce less or (hopefully not) more garbage. Btw. I've attached the current WIP patch with incremental GC to ticket #1886.

-

It's the only sensible way I know about and I think it's the right way. I don't know the network code and can't say anything about the implementation at the moment.

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

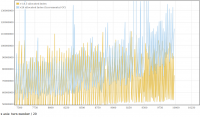

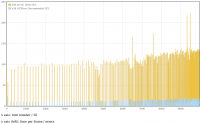

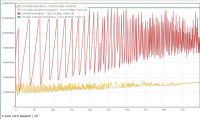

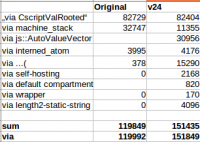

I've had a look at incremental GC. Incremental GC allows splitting up the garbage collection into slices, and JS code can run between these slices. It doesn't make garbage collection faster in general, but it should avoid big lag spikes when too much garbage collection happens at once. SpiderMonkey uses a mark-and-sweep garbage collector. First, it marks garbage in slices (that's the incremental part), then it sweeps everything in another thread.

It was quite a challenge and I wouldn't have a working implementation yet if terrence hadn't helped me on the JSAPI channel. He also opened a bug to add some more documentation. It looks like it's not yet figured out how API users are meant to configure incremental GC because Firefox accesses it quite directly.

Anyway, I implemented a quite simple logic:

- If no GC cycle (the whole GC with multiple slices) is running and if the JS heap size has grown more than 20 MB since the last GC, we start a new incremental GC.
- We run the incremental GC in slices of 10 ms each turn until it's done.

The numbers can be tweaked of course. The part of measuring the heap size since the last GC isn't quite accurate though. What it actually does is set the last heap size to the current heap size if the current heap size is smaller. That's because I didn't find a way to detect when the sweeping in the background thread has finished. So after the marking has completed, the heap is shrinking because the sweeping is running in the background.

Here is a graph showing GC performance. I had to change the profiler to print the exact values each turn instead of printing an average over several turns. I've also changed v1.8.5 to run GC every 50 turns because I had to use only one runtime for measuring and the current settings wouldn't have worked. What we are measuring here are the peaks. It's quite a good result IMO, but it's hardly visible on the graph with total performance and with average values (because GC only runs from time to time). Btw. it's not a mistake, this time v24 is actually better.

The next graphs show memory usage. V24 seems to use a bit more, but most of that is probably related to the GC behaviour. The peaks at the end could be because of the way I'm calculating the "last heap size" and because it takes several turns to mark the garbage before sweeping kicks in and actually frees it.

Memory overview:

Memory zoomed:

-
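The scheduling logic described in the post above can be modelled roughly as follows. This is a minimal Python simulation of the decision rule only, not the engine code and not the SpiderMonkey API: the class name, the `cycle_finished` flag (standing in for whatever the engine uses to learn that marking is done) and the reset of the remembered heap size at the end of a cycle are assumptions made for illustration.

```python
from dataclasses import dataclass

MB = 1024 * 1024


@dataclass
class IncrementalGcScheduler:
    """Hypothetical per-turn decision logic for incremental GC."""
    growth_threshold: int = 20 * MB  # heap growth that starts a new GC cycle
    slice_budget_ms: int = 10        # time budget of the slice run each turn
    last_heap_size: int = 0          # heap size remembered from the last GC
    cycle_running: bool = False

    def on_turn(self, heap_size: int, cycle_finished: bool = False) -> str:
        """Return this turn's action: 'start', 'slice' or 'idle'."""
        if self.cycle_running:
            if cycle_finished:
                # Assumption: remember the heap size when the cycle ends.
                # Sweeping may still be shrinking the heap in the background.
                self.cycle_running = False
                self.last_heap_size = heap_size
                return "idle"
            return "slice"  # run another slice of slice_budget_ms
        # The end of background sweeping can't be detected (per the post),
        # so follow the heap size downwards while it is shrinking.
        if heap_size < self.last_heap_size:
            self.last_heap_size = heap_size
        if heap_size - self.last_heap_size > self.growth_threshold:
            self.cycle_running = True
            return "start"
        return "idle"
```

Calling `on_turn` once per simulation turn with the current heap size gives the same start condition as in the post; both numbers are plain fields, so they can be tweaked without touching the logic.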

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

Some of these questions are answered in the link I've posted to #2370. In my opinion we have to try some different approaches and compare the results. Maps are one thing that could help, but we should only use them if they prove to be the best approach or at least a sufficiently good approach (which the current implementation of entity collections probably isn't).

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

It's a special object described here. I've also written a few words about one way of improving AI performance in ticket #2370. -

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

I assume this question is related to the SpiderMonkey upgrade. The current status is that the upgrade will make the performance a little bit worse for v24. Newer versions (I've tested v26) bring the performance closer to what we had with v1.8.5 and maybe beyond that at some point. Some new features of SpiderMonkey 24 (like JavaScript Maps, for example) could probably help to improve performance. The memory usage problem can be solved by correctly configuring the garbage collection. With the incremental GC it should also be possible to split up the time required for garbage collection more evenly and avoid big lag spikes. I spent the whole day on it yesterday, but I haven't yet completely figured out how GC needs to be configured. There are tons of flags to set and different functions to call, and the documentation is lacking.

-

One good thing about the current farm fields is that if you place multiple connected fields, it looks like one big field. I think it would be best to keep that if possible and still add more variation. Maybe not by using different kinds of plants, but variations of the same plant. The kinds of plants could be civ-specific. The problem with the other props is that they need to make sense visually no matter whether the field is surrounded by other fields, is a single field or is a field on the side.

-

I got many different errors with this one, but interestinglog.html is already overwritten. commands.txt

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

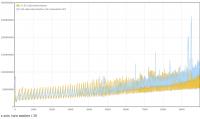

At the moment I'm trying to figure out if what we see on this graph is normal or not. I've reduced the number of GCs a bit because each GC call causes a more noticeable delay than with v1.8.5 (there are many flags to tweak GC though, and I haven't tested them much yet). This graph compares JS memory usage of v1.8.5 and v26 during a 2vs2 AI game. V24 looks more or less like v26, so I didn't add that graph here. The used memory gets much higher because I've reduced the number of GCs. More interesting than the peaks are the lowest parts of the graphs (after a garbage collection). I'd say that would be the amount of memory which is actually in use, without counting memory that could be freed by a GC. It looks like v26 uses about twice as much memory as v1.8.5. It could be explained by the additional information stored by type inference and the JIT compiler. I don't know if such a big difference is normal for that. EDIT: I've dumped the JS heap on turn 1500 and ran a few grep commands to compare where the additional data in memory is coming from. I don't know yet what it means...

-

Awesome portrait again with beautiful lighting and a very dynamic pose. I agree about the background and the "less is more" thing. In the larger version I think his right hand looks a bit strange and some parts of the silhouette look like they're cut out (because you used the eraser).

-

[Discussion] Spidermonkey upgrade

Yves replied to Yves's topic in Game Development & Technical Discussion

Being closer to the upgrade means there's less work left to do and the risk of unacceptable problems showing up is reduced. Although staying on version 1.8.5 is probably not too bad at the moment, it will become a big show stopper if we later figure out there's no way around upgrading. I think it's quite likely that we will be forced to upgrade sooner or later. If we base fundamental design decisions on v1.8.5, the work required for upgrading will increase the longer we wait.

If we upgrade now, there's still the chance that SpiderMonkey will change in a way that requires big architecture changes. That's bad, but keeping track of what's happening there and maybe having a chance to influence it is still much better than continuing to use v1.8.5 without having any idea of what's changing in future versions. That could help us avoid some pitfalls. Unfortunately it's not as easy as quickly reading some changelogs with each SpiderMonkey release. I'm quite sure we wouldn't know about the changed relation between compartments and global objects if I didn't work on the upgrade. I've tried raising the SpiderMonkey developers' awareness of library embedders. It won't stop them from making big changes if they think it's necessary for Firefox, but I hope they will try to make it as easy as possible for us to prepare for it. I really see some change there. For example, they have started to provide supported standalone SpiderMonkey versions on a regular schedule. Also, they expect the API to become more stable soon.

I'm not completely convinced about this, but I still thought it was the most acceptable solution to stick with SpiderMonkey and try to keep our version as close to the development version as possible (by upgrading SVN to ESR releases, keeping a dev branch and syncing it about once every week).

I partially agree here. I don't believe in promises of theoretical performance improvements anymore. On the other hand, I expect the GC performance to be much more universal and less dependent on the scripts than JIT compiler performance. I think we currently have enough bigger problems than GC performance to work on. On the other hand, we won't be able to completely avoid GC hiccups in the main thread with v1.8.5. Wraitii has some good points too IMO. For me the main argument is really to avoid being stuck with an old and "frozen" version without preparing our engine for future changes.