phosit

WFG Programming Team

Posts

240

Joined

-

Days Won

4

Everything posted by phosit

-

Good to know. Thanks for testing.

-

I uploaded a fix for fmt 10. Can you try with this patch applied?

-

Did you use a mod?

-

Vulkan - new graphics API

phosit replied to vladislavbelov's topic in Game Development & Technical Discussion

Was there an error message when it crashed? Can you upload the logs from the old PC?

Did you enable Vulkan without the SPIR-V shader mod?

-

We should make the network error messages more detailed. For example, it should be possible to detect that an ISP blocks UDP.

-

Thanks sternstaup. IMO that's a great idea. A simple use case would be to send the user report to the server over GNUnet, so that the server can't tell which IP the report is coming from. Ideally we would use GNUnet in-game to hide the address from the host. Generally, anonymization networks like Tor have bad latency, but GNUnet seems to have a replacement for UDP; I don't know how that works. First we should encrypt the existing infrastructure and look at QUIC. GNUnet isn't "production-ready", but we are also in alpha.

-

Do I understand you correctly: when you order a unit to do something, the voice line and a timer are started. As long as the timer is not reset, no other sound track will be played. What do you mean by reset? Does some process have to reset the clock, or does the reset happen when the timer reaches zero? I don't know how it's implemented. It would be nice if the unit were set to mouth-idle when the voice line ends. Then no timer would be required.

-

Message System / Simulation2

phosit replied to phosit's topic in Game Development & Technical Discussion

What do you (all) think about adding a layer between the simulation and the renderer? That layer would use the data from the simulation to calculate the data which is not synchronized (corpses, interpolation, sound...). The renderer and the audio system would then use that data. In other words: splitting the simulation into a global and a local part. That will be simpler to implement when there is a clear boundary between simulation and renderer.

Manifest against threading

phosit replied to phosit's topic in Game Development & Technical Discussion

It is implementable (I think). It could be a hidden setting (only in the user.cfg). @Stan` also mentioned making the synchronize-per-turn ratio variable for a28 or so.

Manifest against threading

phosit replied to phosit's topic in Game Development & Technical Discussion

Did you profile it? https://trac.wildfiregames.com/wiki/EngineProfiling#Profiler2 I experienced the same thing: https://code.wildfiregames.com/D4786 No. It is costly to copy JS objects (the simulation components) to another thread. JS can't reference data on another thread. There has to be some kind of OOS check. It isn't done every turn. It works like this: only commands, and sometimes a hash of the simulation state, are shared.
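A minimal sketch of such a periodic OOS check, to illustrate the idea of sharing only a hash of the state. Everything here is an assumption for illustration: `SimState`, `HashState`, `IsHashTurn` and `IsInSync` are hypothetical names, the state is heavily simplified, and FNV-1a is chosen only because it is short, not because the engine uses it.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, heavily simplified simulation state (assumption for illustration).
struct SimState
{
    std::uint32_t turn = 0;
    std::vector<std::int32_t> unitPositions;
};

// FNV-1a over the bytes of every value in the state.
// The hash function is an arbitrary choice for this sketch.
std::uint64_t HashState(const SimState& state)
{
    std::uint64_t hash = 0xcbf29ce484222325ull; // FNV-1a 64-bit offset basis
    auto mix = [&hash](std::uint32_t value) {
        for (int i = 0; i < 4; ++i)
        {
            hash ^= (value >> (8 * i)) & 0xffu;
            hash *= 0x100000001b3ull; // FNV-1a 64-bit prime
        }
    };
    mix(state.turn);
    for (std::int32_t position : state.unitPositions)
        mix(static_cast<std::uint32_t>(position));
    return hash;
}

// The hash is not exchanged every turn, only every `interval`-th turn.
bool IsHashTurn(std::uint32_t turn, std::uint32_t interval)
{
    assert(interval > 0);
    return turn % interval == 0;
}

// A client is in sync if its local hash matches the hash received from a peer.
bool IsInSync(const SimState& local, std::uint64_t remoteHash)
{
    return HashState(local) == remoteHash;
}
```

Only the 64-bit hash crosses the network on a hash turn; the state itself never has to be copied.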

-

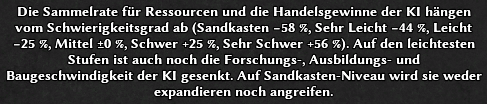

Hello Newbee. Just to be sure: did you go to "Single-player" > "Matches", click the gear icon there and set the AI to "Sandbox"?

-

This will encourage playing with more units, which then decreases performance. Would it be possible to make units on the walls stronger instead? More resistance and probably more damage.

-

IMO a defensive AI should really build walls if the terrain allows it. If no top players build walls, walls should be made stronger (or siege and elephants weaker). Walls are a part of the game.

-

Vulkan - new graphics API

phosit replied to vladislavbelov's topic in Game Development & Technical Discussion

You can disable "Filter compatible replays"; then all replays are visible... but you can't run them. When starting pyrogenesis with "--replay-visual=" it's possible to run them.

Vulkan - new graphics API

phosit replied to vladislavbelov's topic in Game Development & Technical Discussion

I also got some errors. I'll just attach the interesting log. interestinglog.html Isn't there a better solution than asking the users to report all missing shaders? Surely it will not be tested exhaustively and some shaders will be missing in the release.

Manifest against threading

phosit replied to phosit's topic in Game Development & Technical Discussion

I might have formulated it a bit too harshly. We should still use multithreading, but for now (for me) making the game more cache-friendly has higher priority. When I wrote that I didn't know that each core has its own prefetcher. Yes, don't optimize prematurely. But what is an optimization‽ I use reduce over fold when possible. For some people that's already an optimization. Yes, manual prefetching is hard... I think... never done it. I'm not saying we should do it manually, but we should design the data structures in a way that the compiler / the CPU can do it. I don't think that the data access pattern will be remembered after a frame. The linked list is not the only data structure which gets iterated / has to be remembered, and the linked list might be mutated. No, the compiler doesn't do that much devirtualization (we haven't enabled LTO), which also prevents further optimization. If the v-table is in the cache it uses some valuable cache space (that's bad, to my understanding). Also, when I profiled, many CPU cycles were "lost" at virtual function calls. I would like to talk further with you and get some tips on how to profile, but that's easier on IRC. Feel free to join.

COMPILER_FENCE uses deprecated function

phosit replied to deleualex's topic in Game Development & Technical Discussion

I made a patch which removes `COMPILER_FENCE` in some places: D4790

Manifest against threading

phosit replied to phosit's topic in Game Development & Technical Discussion

Interesting... I thought the 64-bit executable would be slower, since pointers are bigger. Apparently more registers and the use of MinGW outweigh that.

Don't take the title seriously. What I am writing is my view and not that of the WFG team.

I am trying to speed up the engine and make it less stuttery. First I was looking at multi-threading, since "if one core is too slow, multiple will be faster", right...

In the most recently uploaded CppCon presentations about Hardware Efficiency and General Optimizations these statements were made: "Computationally-bound algorithms can be sped up by using multiple threads" and "On modern hardware applications are mostly memory-bound". Taken together, it seems impossible or hard to speed up an application using multiple threads.

But step by step: Computationally bound means that the speed of an application is bound by the speed of computation. Faster computation does not always mean a faster application. A web browser is likely network-bound: a faster CPU does not improve the application speed by much, but improving the network speed does (this can also be split up into latency-bound and throughput-bound). An application can be bound by many other things: GPU-bound, storage-bound, memory-bound, user-bound. An application is fast if no bound outweighs the others.

In the last decades CPUs got smaller (smaller is important since that reduces latency) and faster. -> Computationally bound applications became faster. Memory also got faster but is spatially still "far" away from the CPU. -> Memory-bound applications did not become that much faster.

There is some logic that can help us: we have to bring the data (normally inside the memory) spatially near to the CPU or inside it. That's a cache. If the CPU requests data from the memory, the data will also be stored inside the cache. The next time it is requested we do not have to request it from the memory.

Exploit: Use data from the cache as much as possible. More practically: visit the data only once per <something>. Let's say you develop a game where every entity has to update its position and health every turn. You should visit every entity once: "for each entity {update position and update health}". When you update health, the data of the entity is already in cache. If you do "for each entity {update position} for each entity {update health}", at the start of the second for-loop the first entity will not be in cache anymore (if the cache is not big enough to store all entities) and another (slow) load/fetch from memory is required.

When the CPU fetches data from the memory, most of the time it needs an "object" which is bigger than a byte. So the memory sends not only a byte to the cache but also the data around it. That's called a cache line. A cache line is typically 64 bytes.

Exploit: Place data which is accessed together in the same cache line. If multiple entities are packed inside the same cache line, you have to do only one fetch to get all entities in it. Optimally the entities are stored consecutively. If that is not possible, an allocator can be used. The job of an allocator is to place data (entities) in some smart spot (the same cache line). Allocators can yield great speed improvements: D4718. Adding "consecutiveness" to an already allocator-aware container also yields a small speed improvement: D4843

Now and then data has to be fetched from the memory. But it would be ideal if the fetch could be started asynchronously: the CPU would invoke the "fetcher", run some other stuff and then access the prefetched data.

Exploit: The compiler should know which data it has to fetch "long" before it is accessed. In a "std::list" each element stores the pointer to the next element. The first element can be prefetched, but to get the second element prefetching cannot be used: which data to load cannot be determined before the first fetch has completed. This creates a fetch chain where each fetch depends on a previous fetch. Thus iterating a "std::list" is slower than iterating a "std::vector".

Exploit2: Don't use virtual functions; they involve a fetch chain of three fetches. A normal function call only involves one fetch, which can be prefetched, and the call might even be inlined.

Back to threading: In a single-threaded application you might get away with the memory-boundness, since big parts of the application fit into the cache. When using multiple threads, each accessing a different part, they still have to share the cache and the fetcher logic (I didn't verify that with measurements). The pathfinder is not memory-bound (that I did measure), so threading it yields an improvement.

Sometimes I read that pyrogenesis is not fast because it is mostly single-threaded. I don't think that is true. Yes, threading more parts of the engine will yield a speedup, but in the long run reducing memory-boundness should have higher priority than using multiple threads. There also might be some synergy between them.
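The "visit every entity once" point can be sketched in C++ roughly as follows. This is only an illustration under assumptions: the `Entity` struct and the `UpdateFused` / `UpdateSplit` functions are made up for this example and are not the actual pyrogenesis data structures. Both functions compute the same result; they differ only in how often each entity has to be brought into the cache.

```cpp
#include <cassert>
#include <vector>

// Hypothetical entity layout (assumption for illustration).
// The entities live consecutively in a std::vector, so several of them
// can share one cache line.
struct Entity
{
    float x = 0.f;
    float velocity = 0.f;
    int health = 0;
    int regeneration = 0;
};

// One pass: position and health are updated while the entity is in cache.
void UpdateFused(std::vector<Entity>& entities, float dt)
{
    assert(dt >= 0.f);
    for (Entity& entity : entities)
    {
        entity.x += entity.velocity * dt;
        entity.health += entity.regeneration;
    }
}

// Two passes: if the vector does not fit into the cache, the second loop
// has to fetch every entity from memory a second time.
void UpdateSplit(std::vector<Entity>& entities, float dt)
{
    assert(dt >= 0.f);
    for (Entity& entity : entities)
        entity.x += entity.velocity * dt;
    for (Entity& entity : entities)
        entity.health += entity.regeneration;
}
```

Whether the fused version is measurably faster depends on the number of entities and the cache size, so it should be profiled rather than assumed.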

-

Message System / Simulation2

phosit replied to phosit's topic in Game Development & Technical Discussion

That's not what I meant. The messages are still sent via C++ to some JS entity class; that class then sends the message to its component.

Message System / Simulation2

phosit replied to phosit's topic in Game Development & Technical Discussion

How about sending a message to an entity instead of a component, and the JS entity dispatches it to its components? Then there would only be one C++ -> JS communication per entity.
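A rough sketch of the dispatch idea, written in C++ only to keep it compilable; in the proposal the entity class would live on the JS side. All names (`Message`, `Component`, `Entity`, `OnMessage`, `BoundaryCalls`) are hypothetical and not taken from Simulation2.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical message type (assumption for illustration).
struct Message
{
    std::string type;
};

// A component is modelled as a plain message handler.
using Component = std::function<void(const Message&)>;

// Stand-in for the proposed JS-side entity class.
class Entity
{
public:
    void AddComponent(Component component)
    {
        assert(component); // reject empty handlers
        m_Components.push_back(std::move(component));
    }

    // Single entry point: the engine calls this once per entity,
    // the fan-out to the components happens on this side.
    void OnMessage(const Message& message)
    {
        ++m_BoundaryCalls;
        for (const Component& component : m_Components)
            component(message);
    }

    // Number of calls that crossed the (simulated) C++ -> JS boundary.
    int BoundaryCalls() const { return m_BoundaryCalls; }

private:
    std::vector<Component> m_Components;
    int m_BoundaryCalls = 0;
};
```

With two components registered, one `OnMessage` call reaches both of them while the boundary is crossed only once.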