Posts: 194 | Days Won: 4

Everything posted by jonbaer

-

Using AI (Artificial Intelligence) to Get Gameplay Answers?

jonbaer replied to Thales's topic in General Discussion

I also wanted to point out something else: when you take a given output and apply it the first time, much of it looks and seems "hallucinated". That is really expected, because these are random starting points that don't adjust until many hours of gameplay later. It is that updating bit where you need "memory" (what a model really represents), the "RAG[1] of 0AD" if you want to call it that.

[1] https://en.wikipedia.org/wiki/Retrieval-augmented_generation
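A minimal sketch of that "memory" step, assuming notes from previous games are stored as plain-text strings. It uses simple word-overlap (Jaccard) scoring as a stand-in for the embedding search a real RAG setup would use; the function names and the example notes are hypothetical.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(notes: list[str], query: str, k: int = 2) -> list[str]:
    """Return the k stored notes with the highest Jaccard overlap with the query."""
    q = tokenize(query)

    def score(note: str) -> float:
        n = tokenize(note)
        union = q | n
        return len(q & n) / len(union) if union else 0.0

    return sorted(notes, key=score, reverse=True)[:k]


def build_prompt(notes: list[str], query: str, k: int = 2) -> str:
    """Prepend retrieved notes so the model answers from past games, not only its training data."""
    context = "\n".join(f"- {n}" for n in retrieve(notes, query, k))
    return f"Notes from previous games:\n{context}\n\nQuestion: {query}"


# Hypothetical notes logged after earlier games.
past_games = [
    "civ center near the coast let me build a dock early on a naval map",
    "stone and metal ran out late game when the base was far from quarries",
    "early wood shortage slowed the first barracks",
]
print(build_prompt(past_games, "where should I place the civ center on a naval map", k=1))
```

The point is that the notes grow with every game played, so the answers can drift away from the random starting points without retraining the model.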

Using AI (Artificial Intelligence) to Get Gameplay Answers?

jonbaer replied to Thales's topic in General Discussion

You really have to define what a "winning" idea in AI is. For me it is probably what I like to call a "stable equilibrium" in the game. I will give an example, but I still think multimodal AI (i.e. image in, data (actions) out) is very much possible. If I start a game with 8 AIs, a procedural map, nomad mode, population 300, etc., and get to a point where there is balanced play among a group of civs (they are not dying out, alliances are made, etc.), I find a game where the "AI" made good decisions based on the resources @ hand (and maybe understood its mistakes, which is the bit current LLMs cannot do without infinite memory, etc.). This type of setup is long and drawn out and not for everyone, but these kinds of simulation games are really what I think the game can accomplish. You are right that it's all currently based on training from the internet and "hallucinates" like nuts, but it's the instruction/memory/recall part I think you can do (albeit I wish it was with a more slimmed-down version of the game).

A basic example would be the question "what's the best location to place a Civ Center (i.e. base)?" Your own analysis from previous gameplay might be to place it close to a coast, or at equal distance from stone/metal deposits (for late game), or near food/wood (for early game), etc. Or, as is hard-coded in there, build a dock first (would most people understand this, or more importantly, would "AI" have figured it out?). Almost anything from https://github.com/0ad/0ad/blob/master/binaries/data/mods/public/simulation/ai/petra/startingStrategy.js could be approached this way. Other items for AI: the best spot for a marketplace, the best spot for a dock (even applying more AI to the tradeManager, etc.). There are so many small hooks/traces you can plug into headquarters.js. Once again, I don't think the goal is to create an unbeatable AI, but to model the game and make (and explain) better decisions. YMMV.
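As a rough illustration of how "your own analysis from previous gameplay" could be turned into a placement score: the sketch below rates candidate sites by distance to resources, favouring food/wood early and being close to (and equidistant from) stone and metal late. The weights, the straight-line distance, and the early/late split are all assumptions for illustration; this is not Petra's actual placement logic.

```python
from __future__ import annotations

import math


def dist(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Straight-line distance (ignores terrain, water and enemies)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def score_site(site: tuple[float, float],
               resources: dict[str, list[tuple[float, float]]],
               late_game: bool) -> float:
    """Higher is better. Early game favours food/wood; late game favours
    stone and metal that are both close and roughly equidistant (hypothetical weights)."""
    def nearest(kind: str) -> float:
        return min(dist(site, p) for p in resources[kind])

    if not late_game:
        return -(nearest("food") + nearest("wood"))
    stone, metal = nearest("stone"), nearest("metal")
    # Penalise the total distance plus the imbalance between the two mines.
    return -(stone + metal) - abs(stone - metal)


def best_site(candidates, resources, late_game=False):
    return max(candidates, key=lambda s: score_site(s, resources, late_game))


resources = {"food": [(10, 10)], "wood": [(12, 8)], "stone": [(80, 80)], "metal": [(80, 40)]}
candidates = [(11, 9), (70, 60)]
print(best_site(candidates, resources), best_site(candidates, resources, late_game=True))
```

A "close to a coast" term would just be one more distance in the same sum; the interesting part is whether the weights come from hand-tuning or from logged games.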

Using AI (Artificial Intelligence) to Get Gameplay Answers?

jonbaer replied to Thales's topic in General Discussion

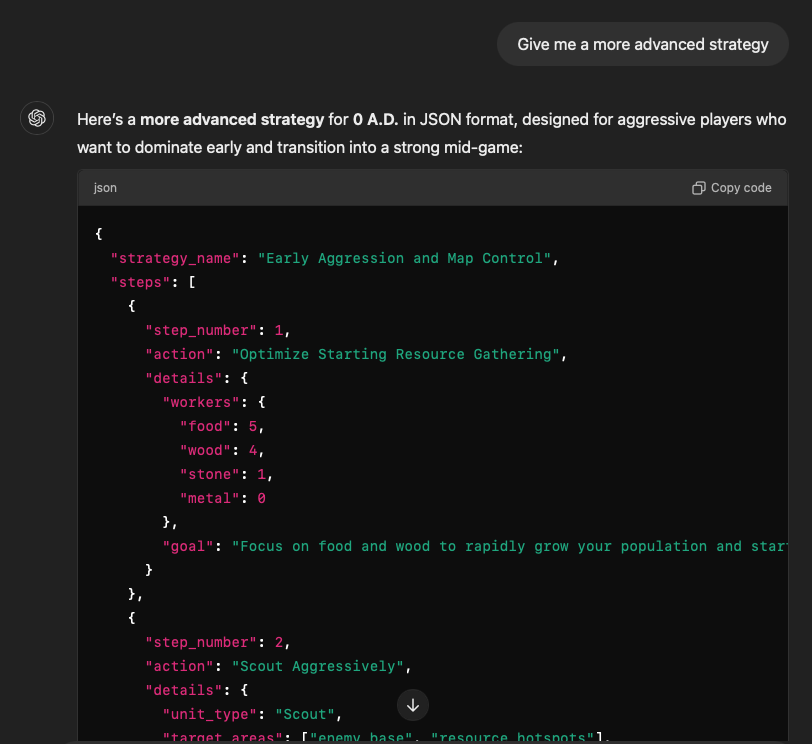

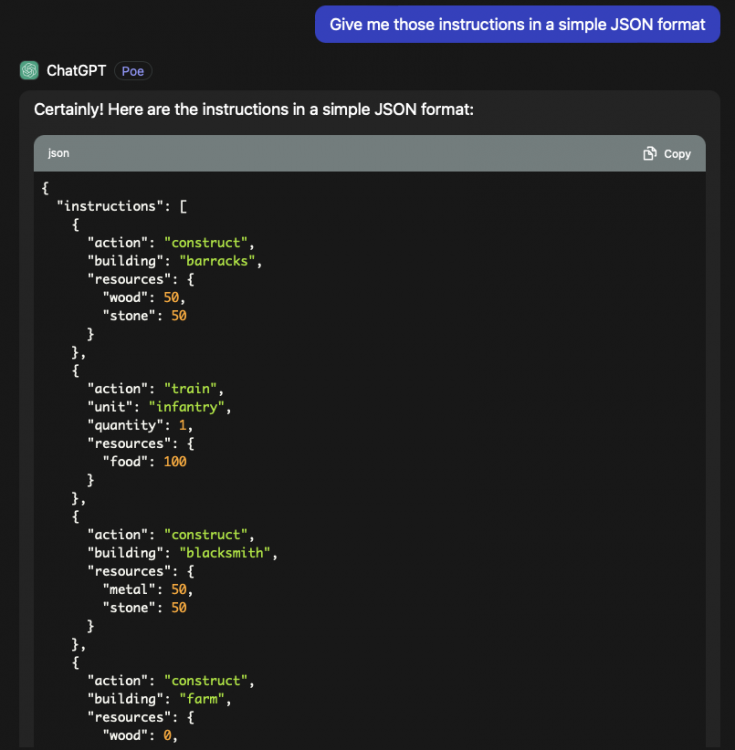

Actually, the real key (I think) w/ this game in general is to plug in some type of LLM that understands the game state (from the discovered minimap) and attach it to all of the Petra AI managers @ https://github.com/0ad/0ad/tree/master/binaries/data/mods/public/simulation/ai/petra. I studied this for a long time and had a working copy w/ Hannibal at https://github.com/0ad4ai/.

Basically (at a high level), on every tick you ask each "manager" to look at this photo (overhead minimap) and tell you the best values for the next action. But certain strategies involve more cycles at every tick, so you have to wait, and it either never makes a move or takes too long (i.e. "thinking").

I have a lot of respect for the developers of Petra/Hannibal. I got hung up on really complex questions like the one you posted, especially when it came to AI-to-AI diplomacy in https://github.com/0ad/0ad/blob/master/binaries/data/mods/public/simulation/ai/petra/diplomacyManager.js ("should I ally/neutral/enemy with you?"). What I find fascinating is that the LLMs (OpenAI/Gemini) do have military strategies baked in (from reading nearly everything) and understand the 0AD manual/game setup. The trick is just formatting the output from that API back into actions (maybe w/ the RL ports). I am sure StarCraft II RTS AI folks do this all the time, but I find 0AD resources much simpler to work with.
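The "formatting it back from that API to actions" step could look something like this: ask the model to answer in JSON, then validate the reply against a whitelist before handing anything to a manager. The action names and the reply format are hypothetical; they are not part of Petra or the RL interface.

```python
import json

# Hypothetical action vocabulary; a real bridge would map these onto Petra manager calls.
ALLOWED_ACTIONS = {"train", "build", "gather", "attack", "wait"}


def no_op() -> dict:
    """Safe default when the model is slow, rambles, or proposes something unknown."""
    return {"action": "wait", "args": {}}


def parse_llm_reply(reply: str) -> dict:
    """Turn a raw model reply (expected to be a JSON object) into one validated action."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return no_op()
    if not isinstance(data, dict):
        return no_op()
    action = data.get("action")
    args = data.get("args", {})
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return no_op()
    if not isinstance(args, dict):
        return no_op()
    return {"action": action, "args": args}


print(parse_llm_reply('{"action": "build", "args": {"template": "dock"}}'))
print(parse_llm_reply("I would build a dock first."))  # prose, not JSON: falls back to a no-op
```

Falling back to a no-op instead of raising means a slow or confused model costs a tick rather than stalling the AI, which is the "never makes a move" problem above.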

Man, that was so long ago I don't think I even remember my own logic for that patch :-) The ticket says "disappointed" jonbaer, but I don't think I was disappointed. This patch also doesn't really solve a Save Map option; it just restarts the game with the same game attributes. I don't know if it shuts everything down properly, as I remember the audio dropping out after a handful of restarts. I do remember playing around w/ pyrogenesis in tests, from https://github.com/0ad/0ad/blob/master/binaries/system/readme.txt:

- `-autostart="TYPEDIR/MAPNAME"` enables autostart and sets MAPNAME; TYPEDIR is skirmishes, scenarios, or random
- `-autostart-seed=SEED` sets randomization seed value (default 0, use -1 for random)
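For relaunching the same map from a script, the two readme.txt flags can be assembled into an argv list. Only `-autostart` and `-autostart-seed` come from readme.txt; the binary name `pyrogenesis` being on PATH and the `random/archipelago` example are assumptions.

```python
from __future__ import annotations

import shlex

# TYPEDIR values listed in binaries/system/readme.txt
MAP_TYPES = {"skirmishes", "scenarios", "random"}


def autostart_args(typedir: str, mapname: str, seed: int = 0,
                   binary: str = "pyrogenesis") -> list[str]:
    """Build an argv list for -autostart / -autostart-seed (seed -1 = random, per readme.txt)."""
    if typedir not in MAP_TYPES:
        raise ValueError(f"TYPEDIR must be one of {sorted(MAP_TYPES)}")
    # No quoting needed inside an argv list; the quotes in readme.txt are for the shell.
    return [binary, f"-autostart={typedir}/{mapname}", f"-autostart-seed={seed}"]


args = autostart_args("random", "archipelago", seed=1234)
print(shlex.join(args))  # copy-pasteable shell command
# subprocess.run(args) would launch the game, assuming pyrogenesis is on PATH.
```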

-

The other (small) annoying thing with Random maps is that there is no way to grab the seed. For example, if you end up on a procedural map that you really enjoy, it's a bit of a pain to dig down to find the seed (and there's no real way to regenerate it). This is not a very common case, but I think at one point I recommended that you should be able to "Save Game" (save game state) and also "Save Map" (save map setup).

-

If you select, say, Random/Archipelago, a seed is generated and has to be extracted. You have to hack around (both to get the seed and to set up a new game save with it for another round).

-

Well, there is a race @ the moment to quantize them and make them small enough, but I agree ... you would get just as much joy storing random text in a mod. I think I found a couple of random seeds for my map that can work for my original objective. If I had a feature request here, it would be to freeze/label the seed as a new map somehow (sorry if this is already possible).
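Until a seed can be frozen as a named map in-game, one workaround is to keep a small labelled list of seeds outside the game. This sketch stores them as JSON; the file layout, the labels and the helper names are hypothetical, and the seed itself still has to be dug out of the game by hand.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path


def save_seed(store: Path, label: str, map_path: str, seed: int) -> None:
    """Record a (map, seed) pair under a human-readable label; re-using a label overwrites it."""
    entries = json.loads(store.read_text()) if store.exists() else {}
    entries[label] = {"map": map_path, "seed": seed}
    store.write_text(json.dumps(entries, indent=2, sort_keys=True))


def load_seed(store: Path, label: str) -> tuple[str, int]:
    """Look a labelled map back up, e.g. to pass to -autostart / -autostart-seed."""
    entry = json.loads(store.read_text())[label]
    return entry["map"], int(entry["seed"])


# Demo in a throwaway directory; in practice `store` would be a file you keep around.
with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "saved_maps.json"
    save_seed(store, "pangaea-ish", "random/archipelago", 1234)
    print(load_seed(store, "pangaea-ish"))
```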

-

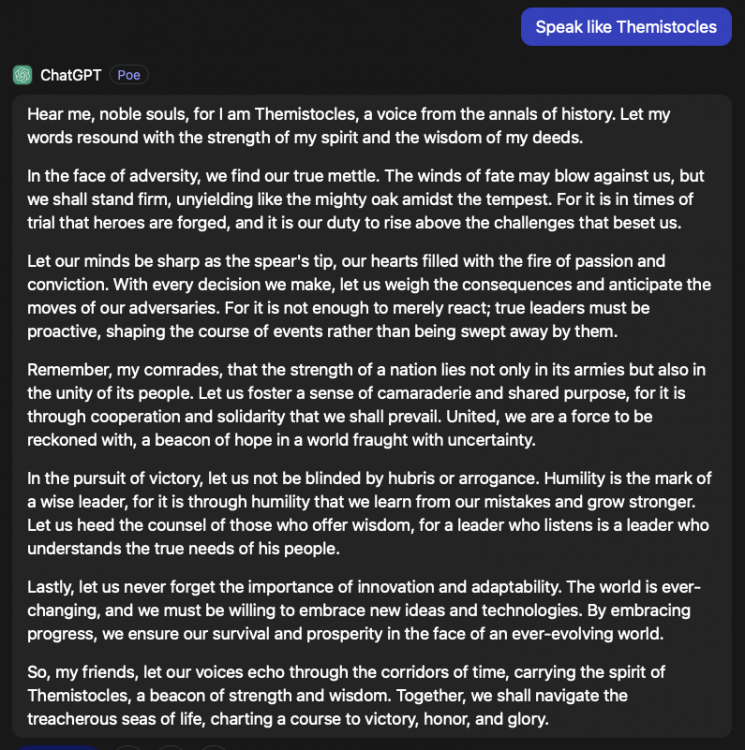

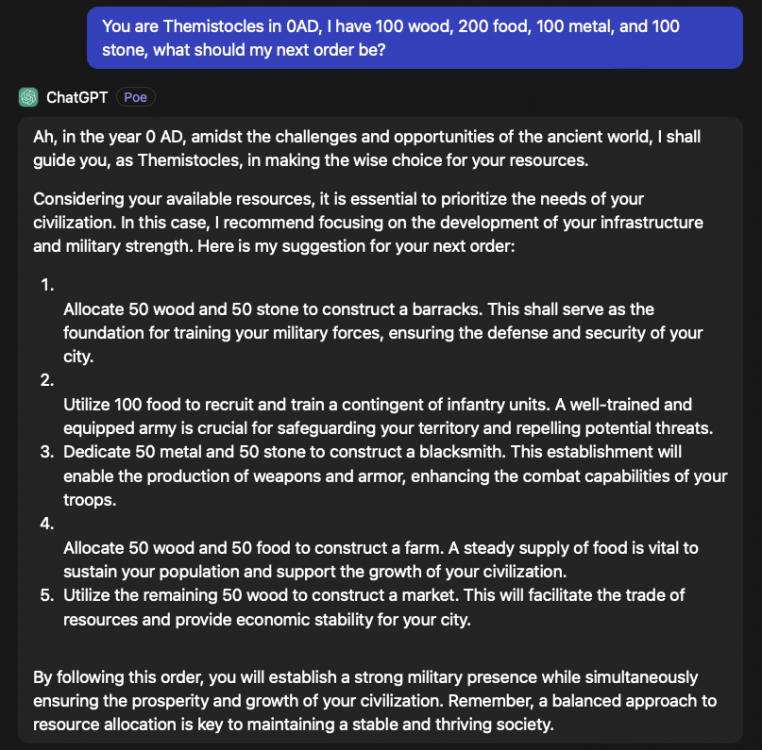

The LLM is a full-time hallucinating machine :-) It was meant to be (half) a joke. However, I think at some point you could feed (or "fine-tune") it with a more in-depth version of a game manual and have it work pretty decently (definitely not as fast as an RTS might require), maybe more as a critic of moves. I recently read that Sony would adjust difficulty settings in real time (https://insider-gaming.com/sony-patent-game-difficulty/), which seems a bit odd but interesting (I thought tons of games already did this). It would actually be kind of cool if, say, you spawned Themistocles or another hero and got a chat/hint message from them on your gameplay in human vs. AI games ... but that is future talk on running locally or remotely (i.e. OpenAI API, etc.). Who knows, maybe that day is sooner than we realize :-\

-

I don't really know how well this works in principle. My original take was mainly that Petra should be moved out into its own mod (w/o public) and nearly all the managers should be exposed to zero_ad.py (my main focus was https://github.com/0ad/0ad/blob/master/binaries/data/mods/public/simulation/ai/petra/tradeManager.js#L587). But since multimodal LLMs can make judgements on strategies from game states/minimaps/Atari-like bitmaps, it might make sense to query one once for general strategy and then, as the game progresses, check for changes in strategy (using the LLM is a bit of a cheat/hack, and there's no way of knowing if you get the best strategy).

It would be a little like reading all the books on Themistocles' strategies and then presenting the state (or minimap) to get the next best set of build orders or something, e.g. "He persuaded the Athenians to invest their income from silver mines in a war fleet of 200 Triremes." Then again, looking through some of the managers, quite a few methods have already been somewhat optimized (and an LLM would probably ruin some things). I think I looked @ https://github.com/0ad/0ad/blob/master/binaries/data/mods/public/simulation/ai/petra/startingStrategy.js#L207 before as well. (Also bear in mind my game + map setup is always the same and conditioned for this, so it doesn't apply to the game overall.) There is also the option of swapping to MicroRTS for a "lighter version of 0AD": https://github.com/Farama-Foundation/MicroRTS-Py
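The "query it once, then check for changes" idea can be kept cheap by only calling the model again when the game state has moved far enough from the state the current strategy was chosen for. In this sketch `ask_llm` is a placeholder callable, the state is a small dict of counts, and the 25% relative-change threshold is an arbitrary assumption.

```python
from __future__ import annotations

from typing import Callable


class StrategyCache:
    """Ask an expensive strategy oracle (e.g. an LLM) again only when the state moves enough."""

    def __init__(self, ask_llm: Callable[[dict], str], threshold: float = 0.25):
        self.ask_llm = ask_llm           # placeholder for the real model call
        self.threshold = threshold       # max relative change tolerated per stat
        self.anchor: dict | None = None  # state the current strategy was chosen for
        self.strategy: str | None = None
        self.calls = 0

    def _changed(self, state: dict) -> bool:
        if self.anchor is None:
            return True
        for key, old in self.anchor.items():
            new = state.get(key, 0)
            base = max(abs(old), 1)  # avoid dividing by zero for empty counts
            if abs(new - old) / base > self.threshold:
                return True
        return False

    def strategy_for(self, state: dict) -> str:
        if self._changed(state):
            self.strategy = self.ask_llm(state)
            self.anchor = dict(state)
            self.calls += 1
        return self.strategy


def fake_llm(state: dict) -> str:
    """Stand-in for the model: go naval once we own a dock."""
    return "naval" if state["docks"] else "land"


cache = StrategyCache(fake_llm)
cache.strategy_for({"pop": 20, "docks": 0})  # first call always asks the model
cache.strategy_for({"pop": 22, "docks": 0})  # +10% pop: cached strategy reused
cache.strategy_for({"pop": 22, "docks": 1})  # first dock: model asked again
print(cache.strategy, cache.calls)
```

Comparing against the state at the last query (rather than the previous tick) stops slow drift from sneaking past the threshold.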

-

I realize this might sound like a crazy thought, but I noticed there was an "encyclopedia" being created/generated @ https://github.com/TheShadowOfHassen/0-ad-history-encyclopedia-mod ... imagine using some of that as the basis of behavior for some civs and leaders. The thing about current LLMs is that they already include game/military/economic strategy from their training data; fine-tuning on that information would be an amazing feat if you could replay it back. We're nowhere near that point, but I'm thinking out loud.

-

Well (in a general sense), the current overall global behavior is really locked and not based on anything, for example here:

```js
// Petra usually uses the continuous values of personality.aggressive and personality.defensive
// to define its behavior according to personality. But when discontinuous behavior is needed,
// it uses the following personalityCut which should be set such that:
// behavior="aggressive" => personality.aggressive > personalityCut.strong &&
//                          personality.defensive < personalityCut.weak
// and inversely for behavior="defensive"
this.personalityCut = { "weak": 0.3, "medium": 0.5, "strong": 0.7 };
```

To get the behavior to decide on actions based on the overall game state, an LLM given some objective should be able to decide (+ use random choice) when it sees the entire board/minimap/ally info. To be clear, I am not using an LLM to "play the game", just logging decision points @ the moment.
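The rule in that comment can be read in reverse as a classifier, which is handy when logging decision points: given continuous personality values, which discrete behavior do they imply? This Python port is only illustrative (Petra itself is JavaScript), and the "balanced" label for values that satisfy neither rule is an assumption.

```python
# Thresholds quoted from Petra's personalityCut above.
PERSONALITY_CUT = {"weak": 0.3, "medium": 0.5, "strong": 0.7}


def discrete_behavior(aggressive: float, defensive: float,
                      cut: dict = PERSONALITY_CUT) -> str:
    """Apply the rule from the Petra comment to continuous personality values."""
    # behavior="aggressive" => aggressive > strong && defensive < weak
    if aggressive > cut["strong"] and defensive < cut["weak"]:
        return "aggressive"
    # "and inversely for behavior=defensive"
    if defensive > cut["strong"] and aggressive < cut["weak"]:
        return "defensive"
    return "balanced"  # assumed label for everything in between


for values in [(0.9, 0.1), (0.1, 0.8), (0.6, 0.4)]:
    print(values, discrete_behavior(*values))
```

Because the thresholds are fixed constants, the behavior never reacts to the game; an objective-driven decider would replace the two fixed inputs with values recomputed from the board.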

-

I was trying to adjust the Archipelago random seed to get something similar but can't come close. Wasn't there a project a while back to produce maps based on supercontinents (it may not have been this game)? I'm looking for a layout close to https://en.wikipedia.org/wiki/Pangaea_Proxima. This map is primarily for 8 AIs (controlled via random LLM strategies), so it doesn't have to be perfect; it's more for research.

-

I'm trying to fix this myself. There seems to be a case, when you play vs. multiple AIs, where the ally/neutral/enemy diplomacy only changes once on a preset condition and then stays there. It would be nice to make this smarter and more challenging somehow. Are there any docs/posts that discuss how this is supposed to work? At the moment I am playing 1 vs. 5 AIs where I decide which alliance makes economic sense, but it would be great if there were a decision maker on the other side in the game. Hope this makes sense. https://github.com/0ad/0ad/blob/master/binaries/data/mods/public/simulation/ai/petra/diplomacyManager.js
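One way to make diplomacy less "set once and forget" is to re-score every other player periodically and only switch stance when the score crosses a cut-off by some margin (hysteresis), so stances don't flip back and forth every check. Everything here (the inputs, the weights, the cut-offs) is a hypothetical sketch, not how diplomacyManager.js works today.

```python
from dataclasses import dataclass


@dataclass
class PlayerView:
    """What one AI knows about another player (all fields hypothetical)."""
    military: float      # their army strength relative to ours, 1.0 = equal
    shares_enemy: bool   # are they at war with someone we are also at war with?
    attacked_us: bool    # did they attack us since the last check?


def desired_stance(view: PlayerView, current: str, margin: float = 0.1) -> str:
    """Return "ally", "neutral" or "enemy", with hysteresis around the cut-offs."""
    score = 0.0
    if view.shares_enemy:
        score += 0.5
    if view.attacked_us:
        score -= 1.0
    score -= 0.3 * (view.military - 1.0)  # a much stronger neighbour counts as a threat
    # Entering a stance needs `margin` more than staying in it (hypothetical cut-offs +/-0.3).
    ally_cut = 0.3 - margin if current == "ally" else 0.3 + margin
    enemy_cut = -0.3 + margin if current == "enemy" else -0.3 - margin
    if score > ally_cut:
        return "ally"
    if score < enemy_cut:
        return "enemy"
    return "neutral"


# Re-run this every N turns for every other player instead of deciding once.
print(desired_stance(PlayerView(military=1.5, shares_enemy=True, attacked_us=False), "neutral"))
```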

-

I have only used and compiled it on OSX and Linux. When you say "it", do you mean it won't compile, or that the Python client won't work/do anything on Windows? Is there an error trace somewhere? This is tough because the game itself is (without doubt) rendered beautifully on Windows/GPUs/gaming rigs, but I think this bit (RL/ML/AI) is really being done more on Linux/in parallel. I come in peace and hope the two camps can work together :-). I guess leaving this as a compile-time option is the only way forward. I was also hoping it could somehow be offered through the mod lobby.

-

I will try. I think the major issue here is probably along the lines of versioning the protobuf builds at some point, and I don't know how that works. Maybe zero_ad could be a pip-installed library with a version in line with the Subversion revision id or the Alpha 23 version, etc. Everything else (beyond what is inside main.cpp) can just be built on top of that. In other words, just give Python the ability to talk to pyrogenesis and push everything else to another repo. I think there should be (or probably already is) some way to detect a client/server mismatch. I don't think @irishninja needs the main build to include the clients. I could be wrong, or this has probably been discussed, but I didn't see it yet.
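A client/server mismatch check could be as small as comparing a version string the game reports with the one the pip package was built for. The `"0.0.23"`-style strings and the rule that the first three numbers must match are assumptions for illustration; whether the RL interface exposes its version this way is not established here.

```python
import re


def parse_version(text: str) -> tuple:
    """'0.0.23b' -> (0, 0, 23): keep only the numeric parts, in order."""
    return tuple(int(n) for n in re.findall(r"\d+", text))


def compatible(client: str, server: str, significant: int = 3) -> bool:
    """Versions match if their first `significant` numeric parts are equal."""
    return parse_version(client)[:significant] == parse_version(server)[:significant]


def check_versions(client: str, server: str) -> None:
    """Fail fast instead of sending messages the other side may not understand."""
    if not compatible(client, server):
        raise RuntimeError(f"zero_ad client {client} does not match game build {server}")


check_versions("0.0.23", "0.0.23b")  # same Alpha 23 series: passes
```

Ignoring letter suffixes treats point releases like 23b as protocol-compatible; that is a policy choice, and a stricter check would compare the full string.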

-

I was able to fix my errors with `pip3 install --upgrade tensorboardX`, and playing around with the zero_ad Python client has been fun. One thing I'd like to figure out (this might already exist, or I just don't know if it can be accomplished) is a way for the Python client to inject overrides for some of the JS prototypes. For example, in Petra the tradeManager links back to HQ for something like this:

`m.HQ.prototype.findMarketLocation = function(gameState, template)`

To me this is a decision-making ability RL would be pretty well suited for (I could be wrong). Having ways to optimize your market in game (with, say, a Wonder game mode) on a random map would be a great RL accomplishment, especially if you got bonus points for working around enemies and allies. Sorry, I have always been fascinated with that function of the game; kudos to whoever wrote it. There are occasions where this AI makes some serious mistakes by not identifying chokepoints/narrow water routes, etc. But to me @ least it's an important part of the game, and pre-simulating it or making that part of the AI smarter would be key. Also, will D2199 make it into the master copy? I can't seem to locate where that decision was(n't) made ... thanks.
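To make the "RL for findMarketLocation" idea a bit more concrete: the simplest RL-flavoured version treats a handful of candidate market sites as arms of a multi-armed bandit and learns, across games, which one yields the best trade income. This epsilon-greedy sketch is a swapped-in technique, not what Petra does, and `play_game_with_market` (with its made-up incomes) is a hypothetical stand-in for running a match and measuring income.

```python
import random


class EpsilonGreedySites:
    """Epsilon-greedy bandit over candidate market sites (one arm per site)."""

    def __init__(self, n_sites: int, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.counts = [0] * n_sites
        self.values = [0.0] * n_sites  # running mean reward (trade income) per site
        self.rng = random.Random(seed)

    def choose(self) -> int:
        """Explore a random site with probability epsilon, else exploit the best estimate."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=self.values.__getitem__)

    def update(self, site: int, reward: float) -> None:
        """Fold one game's reward into that site's running mean."""
        self.counts[site] += 1
        self.values[site] += (reward - self.values[site]) / self.counts[site]


def play_game_with_market(site: int, rng: random.Random) -> float:
    """Hypothetical stand-in for a real match: noisy income, site 2 secretly pays best."""
    true_income = [1.0, 1.5, 3.0, 0.5]
    return true_income[site] + rng.gauss(0.0, 0.5)


bandit = EpsilonGreedySites(n_sites=4, epsilon=0.1, seed=42)
noise = random.Random(7)
for _ in range(500):
    site = bandit.choose()
    bandit.update(site, play_game_with_market(site, noise))
print("estimated income per site:", [round(v, 2) for v in bandit.values])
```

A bandit ignores map context entirely; handling chokepoints or enemy positions would need the reward (or a contextual model) to see the map, which is where a full RL setup comes in.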

-

There are still some minor issues, but I got it running. I had to install rllib directly (`pip3 install ray[rllib]`) and obviously forgot to install the map(s) the first time around :-\ It looks like I may not be running the correct version of TF inside Ray, though, since I get this from the logger:

```
AttributeError: 'SummaryWriter' object has no attribute 'flush'
...
Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/ray/tune/trial_runner.py", line 496, in _process_trial
    result, terminate=(decision == TrialScheduler.STOP))
  File "/usr/local/lib/python3.7/site-packages/ray/tune/trial.py", line 434, in update_last_result
    self.result_logger.on_result(self.last_result)
  File "/usr/local/lib/python3.7/site-packages/ray/tune/logger.py", line 295, in on_result
    _logger.on_result(result)
  File "/usr/local/lib/python3.7/site-packages/ray/tune/logger.py", line 214, in on_result
    full_attr, value, global_step=step)
  File "/usr/local/lib/python3.7/site-packages/tensorboardX/writer.py", line 395, in add_histogram
    self.file_writer.add_summary(histogram(tag, values, bins), global_step, walltime)
  File "/usr/local/lib/python3.7/site-packages/tensorboardX/summary.py", line 142, in histogram
    hist = make_histogram(values.astype(float), bins)
AttributeError: 'NoneType' object has no attribute 'astype'
```

Is there something inside ~/ray_results that would confirm a successful run? (I have not used it much yet but will read over the docs this week.)
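One way to check ~/ray_results is to walk the trial directories and look at the last logged result. This sketch assumes Ray Tune's JSON logger layout: `~/ray_results/<experiment>/<trial>/result.json` with one JSON object per reported result, each carrying a `training_iteration` key. The layout has varied between Ray versions, so treat the path pattern as an assumption to verify.

```python
from __future__ import annotations

import json
from pathlib import Path


def summarize_trials(root: Path = Path.home() / "ray_results") -> dict[str, int]:
    """Map each <experiment>/<trial> directory to its last logged training_iteration.

    Assumes one result.json per trial directory holding one JSON object per line.
    0 means nothing was logged for that trial.
    """
    summary: dict[str, int] = {}
    if not root.is_dir():
        return summary
    for trial_dir in sorted(p for p in root.glob("*/*") if p.is_dir()):
        result_file = trial_dir / "result.json"
        lines = []
        if result_file.is_file():
            lines = [ln for ln in result_file.read_text().splitlines() if ln.strip()]
        last = json.loads(lines[-1]) if lines else {}
        summary[str(trial_dir.relative_to(root))] = int(last.get("training_iteration", 0))
    return summary


if __name__ == "__main__":
    for trial, iteration in summarize_trials().items():
        status = "ok" if iteration > 0 else "no results logged"
        print(f"{trial}: iteration {iteration} ({status})")
```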

-

Thank you, I was able to build this fork and have it running now. I am currently on OSX w/ no GPU (for anything that needs a GPU I usually use Colab or Gradient). I wasn't able to run PPO_CavalryVsInfantry because of what looked like Ray problems, but I will figure it out.

-

Hmm ... I still get:

```
Premake args: --with-rlinterface --atlas
Error: invalid option 'with-rlinterface'
ERROR: Premake failed
```

I don't see it in premake5.lua @ all: https://github.com/0ad/0ad/blob/master/build/premake/premake5.lua. There is only a master branch there, right?

Edit: Sorry, just to be clear, I should apply the D2199 diff if I want that option? I meant I just did not see it anywhere in my latest git pull.

-

I think I really missed it, but what is the status of https://code.wildfiregames.com/D2199? I can't seem to locate

```lua
newoption { trigger = "with-rlinterface", description = "Enable RPC interface for reinforcement learning" }
```

in the git copy I am building from.

-

I actually started a small repo area a while back (after I found the Hannibal 0AD bot), https://github.com/0ad4ai ... but I think the problem he had was that releases were moving so quickly it was hard to nail down a solid externalized interface. I think the minigames are a great place to start, but I tend to break my ideas up too narrowly (market production, for example); many of these can obviously be related to techniques already used in StarCraft play, e.g. resource management. I did not bail on these ideas, but I found a "simpler" version easier to work with, so I have a built copy which is smaller. Even then I moved on to MicroRTS for quicker implementation of ideas, https://github.com/santiontanon/microrts. There is an OpenAI Gym for it, but likewise their issue always seems to be what format to write data out to (binary vs. JSON), especially when your state is quite large. Either way, I would love to see a 0AD-gym available @ some point. It's just hard to say whether it justifies pulling down and using the entire game, or just, say, 1 map + 2/3 civs, etc.
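On the binary-vs-JSON question for large states, a quick way to see the trade-off is to encode the same grid both ways. The 64×64 grid of random floats is a made-up stand-in for a minimap-sized observation; the standard-library `array` module gives a compact fixed-width binary encoding, while JSON stays human-readable but costs many characters per value.

```python
import array
import json
import random

SIZE = 64  # hypothetical 64x64 observation grid (e.g. a downsampled minimap)

rng = random.Random(0)
grid = [rng.random() for _ in range(SIZE * SIZE)]

# Text encoding: readable and language-neutral, but each value takes ~20 characters.
as_json = json.dumps(grid).encode("utf-8")

# Binary encoding: fixed width, typically 4 bytes per value as a C float (float32).
as_binary = array.array("f", grid).tobytes()

# Decoding the binary form; float32 keeps roughly 7 significant digits of each value.
restored = array.array("f")
restored.frombytes(as_binary)

print(f"JSON: {len(as_json)} bytes, binary: {len(as_binary)} bytes")
```

The catch with binary is that both sides must agree on element type, layout and byte order, which is exactly the kind of contract a schema format like protobuf is meant to pin down.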

-

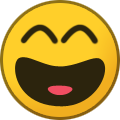

Would there happen to be anyone here who could put together / design this map layout? (It is basically the Arctic Circle w/ the Northern Sea Route open.) https://en.wikipedia.org/wiki/Northern_Sea_Route

-

Wasn't someone already doing nightly builds from the git repo, or is that just my imagination?