Search the Community

Showing results for tags 'code coverage'.

-

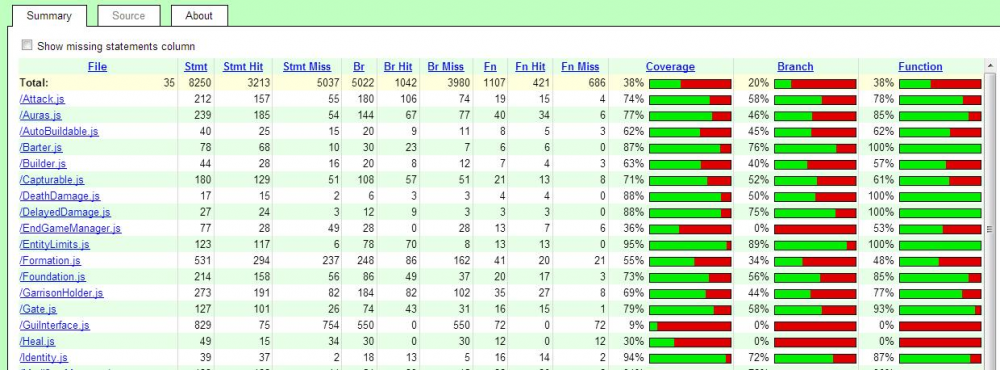

While discussing a quirk of the common AI API on IRC Stan suggested to take a code coverage measurement on the existent simulation components, since I had the toolchain already set up for AI development. I took the challenge and now present first results. However, I decided to not attach this to the already running jasmine-thread since I consider it an independent topic. To reproduce the measurements: Attached to this posting is a zip archive. Unzip it to binaries/data/mods/public/simulation. A new directory CoverageMeasurement will show up. Inside that directory, the subdirectory instrumented already contains the results of an instrumented run. Load the jscoverage.html file into a fully-scripting-enabled browser to see the results. They will look somewhat like this: To rerun the analysis, launch the runcomponenttests.sh shell script from the CoverageMeasurement directory. That script requires that the zip archive has been unpacked at the particular destination and 0AD fully compiled (SpiderMonkey shell is compiled via the update-workspaces.sh script). Observations during analysis: The test scripts are normally run from the pyrogenesis test script file test_scripts.h via the cxxtest subsystem. This means the scripts can enjoy the full Pyrogenesis environment, which is not available when runcomponenttests.sh runs the test scripts via SpiderMonkeyShell. For example, I had to manually define all interface identifiers IID_XXX in the jscover-driver.js file which contains the actual 0AD-specific analysis logic. Since I just faked the missing Engine functions, the logic of some test cases might not work, giving improper coverage results. Still, I found some component methods which seem to be not tested. My homebrew driver script runs all test scripts in the same JS environment without resetting the global scope. This caused SpiderMonkey errors when const values were redefined. To overcome this, the shell script wraps the content of each test script into a "(function () { test script })()"; encapsulation using an sed script. Some test scripts still caused errors and I resorted to skipping them for the first draft. Error analysis seemed too cumbersome when it is unclear whether this approach is feasible at all. The skipped test cases are marked in the CoverageMeasurement/jscover-driver.js file. Code coverage measurements are taken via the JSCover tool (not the most recent version). This tool is originally intended for web development and so relies on running a JSCover server in the background, while browsing the reports. I hacked the JSCover main script to allow browsing the results without the server running, as this was needed to integrate the JSCover results into a JSDoc documentation site. The hacked jscoverage.js script is found in the CoverageMeasurement/JSCover directory. Any comments or questions welcome. CoverageMeasurement.zip

-

- 3

-

-

-

- code coverage

- javascript

-

(and 2 more)

Tagged with: