Teiresias

Community Members

Posts

32


Days Won

2

Teiresias last won the day on September 26 2020

Teiresias had the most liked content!

Teiresias's Achievements

Discens (2/14)

18

Reputation


A way of unit-testing AI code using jasmine

Teiresias replied to Teiresias's topic in Game Development & Technical Discussion

Itms, thanks for the "padding on the shoulder". I admit I might be a bit extreme regarding testing, since I partially do this for a living.

Regarding your question: I'm afraid not. When writing jasmine tests I am in a JS-only world. Since a unit test is about testing the smallest isolatable parts of a software system (usually a single function or a class), this is not a problem for me. But if you intend to include the Pyrogenesis engine activities together with the JS code, that is actually an integration test, and usually harder to achieve than unit testing. I don't know of any off-the-shelf solution, in particular if multiple runtime environments are involved (native code vs. the SpiderMonkey JS environment).

In my AI experiments I faced similar problems with the common AI and currently use two approaches:

- Include the common AI code in the JS space where the jasmine tests execute. Since both are JS, this is possible. However, I still try to avoid this as much as possible since it introduces an external dependency.
- Lift up to system test level, i.e. run the whole Pyrogenesis executable in autoplay mode with special script files that generate a scenario map tailored for testing and evaluating the AI behavior. This is a very cumbersome method and I try to avoid it wherever possible. No JSCover analysis is done with this method.

Questions on AI API3 template handling

Teiresias replied to Teiresias's topic in Game Development & Technical Discussion

In my AI scripting experiments I also use negative values to indicate "this resource amount still has to be gathered to do that" (not compliant with a schema, but still working fine).
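As a rough illustration of the negative-value convention mentioned above, here is a minimal sketch. The helper name `remainingAfter` and the sample amounts are assumptions made up for this example and are not part of the API3.

```javascript
// Hypothetical helper: subtract a cost table from the available stock.
// A negative entry means that this amount still has to be gathered.
function remainingAfter(available, cost) {
    const balance = {};
    for (const type of Object.keys(cost))
        balance[type] = (available[type] || 0) - cost[type];
    return balance;
}

// Made-up sample data for illustration only.
const stock = { "food": 50, "wood": 20 };
const price = { "food": 30, "wood": 100, "metal": 10 };
const balance = remainingAfter(stock, price);
console.log(balance);
```

Resources missing from the stock are treated as zero here, so they show up as a deficit of the full cost.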

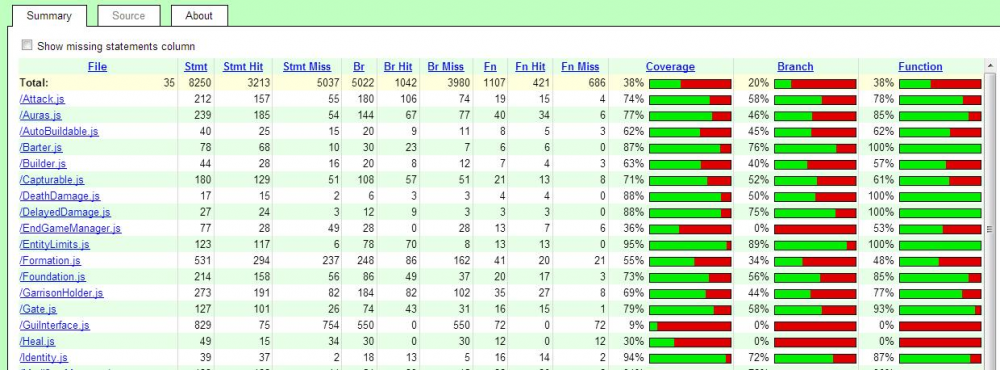

While discussing a quirk of the common AI API on IRC, Stan suggested taking a code coverage measurement of the existing simulation components, since I already had the toolchain set up for AI development. I took the challenge and now present first results. However, I decided not to attach this to the already running jasmine thread, since I consider it an independent topic.

To reproduce the measurements:

- Attached to this posting is a zip archive. Unzip it to binaries/data/mods/public/simulation. A new directory CoverageMeasurement will show up.
- Inside that directory, the subdirectory instrumented already contains the results of an instrumented run. Load the jscoverage.html file into a fully-scripting-enabled browser to see the results. They will look somewhat like this:
- To rerun the analysis, launch the runcomponenttests.sh shell script from the CoverageMeasurement directory. That script requires that the zip archive has been unpacked at that particular destination and that 0AD is fully compiled (the SpiderMonkey shell is compiled via the update-workspaces.sh script).

Observations during analysis:

- The test scripts are normally run from the pyrogenesis test script file test_scripts.h via the cxxtest subsystem. This means the scripts can enjoy the full Pyrogenesis environment, which is not available when runcomponenttests.sh runs the test scripts via SpiderMonkeyShell. For example, I had to manually define all interface identifiers IID_XXX in the jscover-driver.js file, which contains the actual 0AD-specific analysis logic. Since I just faked the missing Engine functions, the logic of some test cases might not work, giving improper coverage results. Still, I found some component methods which seem not to be tested.
- My homebrew driver script runs all test scripts in the same JS environment without resetting the global scope. This caused SpiderMonkey errors when const values were redefined. To overcome this, the shell script uses a sed script to wrap the content of each test script into a "(function () { test script })();" encapsulation. Some test scripts still caused errors and I resorted to skipping them for the first draft. Error analysis seemed too cumbersome while it is unclear whether this approach is feasible at all. The skipped test cases are marked in the CoverageMeasurement/jscover-driver.js file.
- Code coverage measurements are taken via the JSCover tool (not the most recent version). This tool is originally intended for web development and so relies on a JSCover server running in the background while the reports are browsed. I hacked the JSCover main script to allow browsing the results without the server running, as this was needed to integrate the JSCover results into a JSDoc documentation site. The hacked jscoverage.js script is found in the CoverageMeasurement/JSCover directory.

Any comments or questions welcome.

CoverageMeasurement.zip
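The effect of the function wrapping described in this post can be sketched as follows. This is only an illustration under assumptions: it uses Node.js and its `vm` module instead of the SpiderMonkey shell, and the two script strings and the names `wrapInIife` and `runAll` are made up for the example. The original setup does the wrapping with sed in a shell script.

```javascript
const vm = require("vm");

// Two hypothetical "test scripts" that declare the same top-level const.
const scriptA = "const SHARED_NAME = 1; results.push(SHARED_NAME);";
const scriptB = "const SHARED_NAME = 2; results.push(SHARED_NAME);";

// Wrap a script body in an immediately invoked function expression so
// that its declarations stay local to that function.
function wrapInIife(source) {
    return "(function () {\n" + source + "\n})();";
}

// Run all scripts one after another in a single shared global scope.
// Returns the name of the first error, or null if every script ran.
function runAll(scripts, results) {
    const context = vm.createContext({ results });
    try {
        for (const source of scripts)
            vm.runInContext(source, context);
        return null;
    } catch (error) {
        return error.name;
    }
}

const plainResults = [];
const plainError = runAll([scriptA, scriptB], plainResults);

const wrappedResults = [];
const wrappedError = runAll([scriptA, scriptB].map(wrapInIife), wrappedResults);

console.log("unwrapped:", plainError, plainResults);
console.log("wrapped:", wrappedError, wrappedResults);
```

Without the wrapper, the second script fails on the redeclared const. With the wrapper, each script gets its own function scope and both run. The wrapper does not reset anything the scripts assign to the global object, so it only addresses the redeclaration problem.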


Tagged with: code coverage, javascript (and 2 more)

In yesterday's IRC I mentioned using jasmine tests for AI development, and the topic was considered to be of possible interest to others. So I hereby provide a trimmed-down demonstrator of how a test suite can be set up.

The attached zip archive contains a copy of the API3 AI high-level interface with two test cases (one of them discovered the problem discussed here), plus the necessary infrastructure (jasmine 3.6.0 release and driver html page). To execute the test cases, just extract the zip content to some directory and load the common-api/jasmine-runner.html file into a scriptable web browser. Said html file is commented to show how the jasmine framework, the API3 under test and the test files interact.

Items to consider:

- For the demo, I used the official jasmine standalone release, which contains a version number in its path. In "production use" I rename the directories to get rid of the version number, so no changes are needed when upgrading jasmine.
- It is also possible to execute the unit tests via the SpiderMonkey js shell by loading all of the scripts and running the jasmine bootstrap code.
- I also managed to connect the jasmine test suite to the JSCover code-coverage measurement tool, but this requires a more complicated setup, i.e. a list of all source and test case js files plus a platform-dependent batch job and a hacked-up JSCover driver js script. I can provide a demonstration if there is interest.

Any comments welcome.

jasmine-demo.zip
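For readers who have not seen a jasmine spec before, a minimal sketch of what such a test file can look like follows. The function `totalCost` is a hypothetical stand-in for a small piece of bot logic and is not taken from the API3 or from the attached archive. Only `describe`, `it`, `expect` and `toBe` are jasmine's own functions.

```javascript
// Hypothetical function under test; it stands in for any small,
// isolatable piece of bot logic and is not part of the API3.
function totalCost(cost) {
    let sum = 0;
    for (const type of Object.keys(cost))
        sum += cost[type];
    return sum;
}

// The spec only registers when the jasmine globals are present, for
// example after a runner html page has loaded the framework.
if (typeof describe === "function" && typeof expect === "function") {
    describe("totalCost", function () {
        it("adds up all resource amounts", function () {
            expect(totalCost({ "food": 1, "metal": 4 })).toBe(5);
        });

        it("returns 0 for an empty cost table", function () {
            expect(totalCost({})).toBe(0);
        });
    });
}
```

The guard around the spec is only there so the file can also be loaded outside a jasmine environment. In a real suite the code under test and the spec would normally live in separate files.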


Questions on AI API3 template handling

Teiresias replied to Teiresias's topic in Game Development & Technical Discussion

Thank you for the explanation. Your assumption may still hold, as I constructed the raw template data programmatically (similar to the demonstrator in the first post) and this way bypassed the schema entirely. I can live with it. I was just wondering whether I am using the API3.Template class in the wrong manner, before constructing a load of code doing so.

I tried playing around with the AI API3 and set up my own entity template. In doing so I noticed an interesting behavior of the API3.Template.cost() function. I extracted a demonstrator for it. If you execute the following snippet

    (function () {
        var rawTemplate = {
            "Cost" : {
                "Resources" : {
                    "food" : 1,
                    //"wood" : 2,
                    "stone" : 0,
                    "metal" : 4
                }
            }
        };
        var fakeSharedAI = { "_templatesModifications" : {} };
        var testee = new API3.Template(fakeSharedAI, "someTemplate", rawTemplate);
        warn("Costs are " + uneval(testee.cost()));
    })();

in the context of an AI script (e.g. inject it into Petra's start-up sequence), the 0AD log receives the following entry:

Note the NaN in the second property. So it seems template instantiation accepts skipped resource definitions gracefully, but I do not understand the behavior on "stone":0. Am I doing something wrong? I expected something like "{food:1, stone:0, metal:4}" to be built up.
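One way a zero amount can turn into NaN in plain JavaScript is a lookup that tests values for truthiness, because 0 is falsy and converting undefined to a number yields NaN. The sketch below only illustrates that mechanism. The functions `lookupTruthy`, `lookupPresent` and `collectCost` are hypothetical and are not the actual API3.Template code, so whether this is the cause of the observed behavior is an assumption.

```javascript
// Hypothetical path lookup, NOT the actual API3.Template code. It walks
// a "Cost/Resources/stone" style path and gives up on any falsy step.
function lookupTruthy(template, path) {
    let value = template;
    for (const key of path.split("/")) {
        if (value[key])
            value = value[key];
        else
            return undefined;
    }
    return value;
}

// Variant that tests for the presence of the key, not its truthiness.
function lookupPresent(template, path) {
    let value = template;
    for (const key of path.split("/")) {
        if (value === null || typeof value !== "object" || !(key in value))
            return undefined;
        value = value[key];
    }
    return value;
}

// Build a cost table by converting every listed resource to a number.
function collectCost(template, lookup) {
    const result = {};
    for (const type of Object.keys(template.Cost.Resources))
        result[type] = +lookup(template, "Cost/Resources/" + type);
    return result;
}

const rawTemplate = { "Cost": { "Resources": { "food": 1, "stone": 0, "metal": 4 } } };
const truthyCost = collectCost(rawTemplate, lookupTruthy);
const presentCost = collectCost(rawTemplate, lookupPresent);
console.log(truthyCost, presentCost);
```

With the truthiness-based lookup the zero entry is treated like a missing one and becomes NaN after the numeric conversion, while the presence-based lookup keeps it as 0.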


Hi stanislas69, I already tried introducing JsDoc some time ago with a focus on the AIs, and we had a discussion about it (see https://wildfiregames.com/forum/index.php?/topic/19488-proposal-enhance-common-api-with-documentation/). At that time, a big obstacle we discovered was that JsDoc used a different JavaScript interpreter than 0AD itself: Rhino vs. SpiderMonkey. I don't know whether this has changed by now, but at that time it seemed like the 0AD JavaScript code was compatible only with 0AD itself, as SpiderMonkey seems to be the spearhead of JS development. Any separate tool using a different analyzer may cause trouble.

Quoting mimo: "If it has JS compatibility issues which prevent us from using some JS features that we would want to use, that's a very strong point against its use."

What is your concept for handling this problem?

(Sorry for the less-than-optimal layout. My browser has severe problems with the new forum editor which I have not resolved yet.)


Any start point for AI development?

Teiresias replied to analca3's topic in Game Development & Technical Discussion

The question of getting involved with AI development comes up from time to time. The general consensus seems to be that you indeed start by digging through the sources and modifying the existing bot to your needs.

You say you "need to do a BIG BIG work with AI". Is this a master's thesis or similar? If so, I suggest checking with your mentor before starting:

1. The AI API is not stable and requires adjustment of the bot script code from time to time. See here. If you are doing thesis work with a deadline, it may be advisable to check out and freeze a specific revision to prevent your module from getting broken at an undesirable time.
2. Will your work have to become part of the official code base to be accepted in your studies? If so, approach #1 is probably not feasible.
3. Regarding the non-existent documentation, this has been discussed before, and the consensus seems to be that the difficulties caused by having to "read the code" are negligible compared to building up your own concepts.
4. The ultimate goal of an AI is to give the user (player) a challenging and fun experience. This is more about passing the Turing test than about building algorithms/structures.

Besides the tutorial already mentioned, additional information may be gathered in the forums by searching for "AI", "petra", "aegis", "jubot" etc.

An event in Paris about 0ad to find contributors

Teiresias replied to lexa's topic in General Discussion

lexa: JuKu96 created a tutorial on bot development some time ago. Additionally, you may check the forum topics tagged "AI", but afaik not all relevant threads have been tagged.

Seconding sanderd17, the AIs of 0AD evolved gradually from the ancient "testbot". It was implemented as a demo by the person who implemented the original AI interface in the engine. To quote sanderd17 from #0ad:

21:52 < sanderd17_> The first AI was only meant as a test IIRC, but sadly, it kept growing bigger, until it became a monster.

Put in a friendly way, the AI is not designed but grown. So there seem to be only a few high-level design/planning documents available. I doubt the AI<->engine concept will be changed as a whole in the foreseeable future, but I recommend that you practice for some hours on your own before holding the event.

Regarding your planning, two times two hours might prove a bit short. You might want to chat with mimo about a good "place to enter". Another option is to fetch a copy of testbot/jubot from an earlier SVN version and adapt it to the new API, to use as a "drosophila".

Request for Comments: Hannibal Group Scripts

Teiresias replied to agentx's topic in Game Development & Technical Discussion

I think there are two fundamental improvements in your concept:

- Regarding performance, the event-driven approach is probably far superior to any polling system based on entity collections, as supplied with the current API. If driven far enough, maybe you could do away with BaseAI.handleMessage() altogether. IIRC that function has been identified as a performance dropper in some forum discussions.
- Your DSL nicely abstracts away the tedious details of reading templates, isolating entities etc., which tend to clutter up the JavaScript code of the current bots (including mine).

Generally, your DSL and event system implement an expert system somewhat similar to what was used in AoK, but at an entity-level granularity. This is probably better suited to defining intelligent behavior than pure JS. Looks promising!

PS. I meant SDK = Software Development Kit. I was not sure whether you will provide a complete set of scripts or whether Hannibal is just the pure "script runtime".

Request for Comments: Hannibal Group Scripts

Teiresias replied to agentx's topic in Game Development & Technical Discussion

I think av93's question raises an interesting point, which seems to have been swinging along "under the hood" of many AI-bot related discussions: Shall we concentrate all efforts on one (*THE*) 0AD bot for extraordinary quality and glory, or are we going to look at/for a zoo of different bots and concepts, so that the best concepts will eventually evolve? Personally I tend toward the second option, but there seem to be quite a few people in favor of the first one.

@agentx: In your initial post, you state "because this what this bot is all about.". I get the impression Hannibal is more of a new "AI-bot SDK" than a bot. In your second post you say "Depends on the group script author. He/she may use:...". So will the bot behavior be hand-coded, or are you going to infer the group scripts from the triple store?

At l(e)ast we have a larger discussion group now... I will try to answer all points of the previous comments:

@feneur,mimo: I have chosen JsDoc because it seemed the best "Doxygen for JavaScript" tool available; there are not too many of them anyway. Of course, this concept introduces a dependency on the source code, and I had to learn that the JS language changes much faster than traditional programming languages do. So this approach has its drawbacks. But, in my experience, a src->doc tool raises the chances of docs and src staying in sync, as both are "closer to each other". Separate documents tend to get neglected when the sources are updated, unless you have strict QA enforced. I even tend to write my designs into class/namespace overviews.

@niektb,mimo: "struggled with basic tasks": I got a taste of it on my own today. In an attempt to write a quick-and-dirty driver for my defense system experiments, I tried to use API3.Filters.byTerritory() and failed to get it working. I found only one example of it in an older Aegis version, and that was not self-explanatory. Finally, I decided it was faster to write my own version than to debug the existing one.

@niektb: "unfortunately Aegis was difficult too": The older testbot was simpler to understand, but has been dropped from the repository.

@mimo: "improving the petra doc is in my plans, but I never find the time to do it": Based on my personal experience I can only recommend writing documentation immediately. Otherwise, you might never find the time once the task has grown really big.

@agentx: "I've also thought of publishing here a minimal bot, (...) and you are knee deep in map analysis.": I can see that. Maybe this (c|sh)ould lead to a step-by-step tutorial which first uses a hard-coded bot on a hard-coded map, and then expands to more and more flexibility.

"I agree with feneur that an invitation to AI devs needs more than a documentation.": I agree with you. I just stumbled over the problem of figuring out how to use some of the API functions and thought I could help to improve things a bit here.


I dug a little deeper. Apparently, JsDoc uses a separate parser for reading .js files; currently, Rhino and Esprima can be used. Neither of them seems to support the for-of loop construct. With the Mozilla people marking it as experimental, the other JS parser/runtime implementors seem to be waiting to see how the experiment turns out. It might be possible to patch Esprima's parseForStatement() function to accept the new style.

I haven't gone down that road: judging by the results of this thread, no one is interested in a (Doxygen-like) documentation of the bot API. Maybe the intended audience simply does not exist(?). So, I consider my proposal to be rejected.


@agentx: Trying out new ideas will still require knowledge of

- how to "read the game state/templates" and control the AI player's actions
- how to fit your idea into the current structure of the bot. For example, if you are preparing for an early mass attack with multiple barracks continually training, how to pre-set the economy to provide resources in time.

One can figure out all of this by just reading the sources, but it's probably becoming a challenge.

@mimo: The compatibility issue is that JsDoc at the moment does not accept the following for-loop style:

    for (let id of data.ents)
    for (let [id, ent] of this._entities)

I assume this is a new JS language feature introduced too recently for JsDoc to have been updated already. At least, neither I nor any of my JS books knew of that style. If this is a problem, I may try to create a patch so the tool will accept that construct. Currently JsDoc reports a syntax error on these loops while parsing the sources.

At two other points I took a shortcut:

- Rewrote the API3.Template definition to not use the API3.Class({ ... }) constructor; it seems to be getting phased out anyway.
- Removed the surrounding anonymous function constructs var API3 = function () { ... }(API3); from the source, as they seem to have no effect but complicate namespace detection. I presume they are intended for enclosing global variables, but I have not seen any.

At these two points, JsDoc accepts the source in its original shape, but documenting it without these constructs was easier.
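To make the loop style in question concrete, the sketch below shows both for-of forms next to loops written without for-of. The sample data is made up, and treating the second collection as a Map is an assumption for illustration. Whether a given documentation parser accepts the rewritten forms has not been checked here.

```javascript
// Hypothetical stand-ins for the data the quoted loops iterate over.
const ents = [11, 12, 13];
const entities = new Map([[11, "house"], [12, "barracks"]]);

// for-of over an array, as in "for (let id of data.ents)".
const idsForOf = [];
for (let id of ents)
    idsForOf.push(id);

// for-of with destructuring, as in "for (let [id, ent] of this._entities)".
const pairsForOf = [];
for (let [id, ent] of entities)
    pairsForOf.push(id + ":" + ent);

// The same iterations written without for-of.
const idsClassic = [];
for (let i = 0; i < ents.length; ++i)
    idsClassic.push(ents[i]);

const pairsClassic = [];
entities.forEach(function (ent, id) {
    pairsClassic.push(id + ":" + ent);
});

console.log(idsForOf, pairsForOf, idsClassic, pairsClassic);
```

Both variants visit the same elements in the same order, so rewriting the loops would be a purely syntactic change, at the cost of diverging from the style used in the game's sources.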
